ELJonline: Embedded Designs Move to Linux and Systems-on-a-Chip

Jul 1, 2001, by LinuxDevices Staff, from the LinuxDevices Archive

David discusses his reasons for picking “x86-on-a-chip” for embedded designs.

The computer is experiencing another of its many transformations. The age of electronic computing began with machines that filled warehouses, cost millions of dollars and were unavailable to anyone other than governments and large corporations. Gradually they evolved from mainframes to minicomputers and then microprocessors. The age of pervasive computing is driving the need for higher levels of integration and lower costs. Products that require an end user who must be skilled in the use of complex software programs or nonintuitive commands have as much chance of succeeding in today's competitive market as an automobile that requires a hand crank to start the engine.

Advances in integrated circuit manufacturing technology have made it possible to have miniaturized computing power that was inconceivable just a few years ago. Now, true single-chip systems with more processing power than was used to launch the first manned missions to the moon are reality. These Systems-on-a-Chip (SOC) bring a level of reliability and flexibility to product design that will dramatically change the nature of the world around us.

By balancing performance, functionality, integration and reliability, such devices can be truly green, consuming less than one watt.

The Embedded Market vs. the Desktop

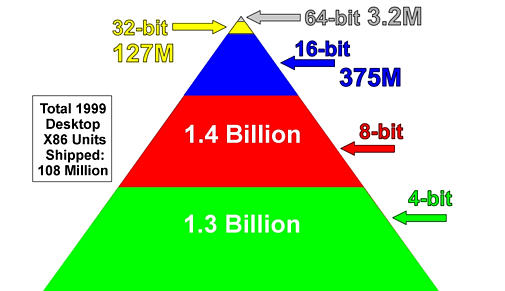

There has been a great deal of discussion recently about the post-PC era. Those who predict that the post-PC era began last year, or that it is just dawning, are by and large mistaken. A glance at the total number of processors shipped since the dawn of the microprocessor quickly reveals that the desktop PC has been, and continues to be, the proverbial drop in the bucket among computing devices (see Figure 1). Rarely since the invention of the microprocessor has more than 5% of total production gone onto the desktop. Today, ever-increasing numbers of microprocessors are being embedded in the products that surround us wherever we go. The past and future of computing have been, and will continue to be, embedded systems.

Figure 1. Embedded Systems Shipped

The same is true in terms of software. It is not uncommon, when operating systems are being discussed, for the “experts” to casually toss out the “fact” that 85% (or whatever number is currently in vogue) of all computers run on Microsoft software. It stands to reason, then, that if less than 5% of the microprocessors shipped go into personal computers, the vast majority of operating system code in use cannot belong to desktop-type operating systems. In fact, numerous studies have found that by far the most pervasive operating systems are those termed proprietary.
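The arithmetic behind this argument can be made explicit. The sketch below takes the two round figures quoted above (at most 5% of microprocessors go into desktop PCs, and a claimed 85% Microsoft share of those PCs) and computes what that share amounts to across all shipped processors. Both inputs are the article's approximate numbers, not measured data, and the function name is purely illustrative.

```python
def share_of_all_processors(desktop_fraction: float, os_share_on_desktop: float) -> float:
    """Fraction of all shipped processors that run a given desktop OS."""
    return desktop_fraction * os_share_on_desktop


desktop_fraction = 0.05  # upper bound quoted in the text
microsoft_share = 0.85   # the "in vogue" figure quoted in the text

overall = share_of_all_processors(desktop_fraction, microsoft_share)
print(f"Microsoft share of all processors: {overall:.2%}")
print(f"Everything else: {1 - overall:.2%}")
```

Even taking the 85% claim at face value, the result is only a few percent of all processors shipped, which is the point the paragraph makes.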

Why Linux Changes the Equation

OEMs must have control over their own destiny. There are two ways to accomplish this. One is to continue creating proprietary architectures, but the worldwide shortage of programmers and time-to-market constraints are making it much harder to justify reinventing the wheel. The other, and by far the better solution, is to seek out open standards and open architectures. More than 60% of the embedded systems produced in the last four years have used a proprietary operating system. This has been driven by the need to own the source code. Less important than eliminating royalties on a per-unit basis has been the need to support products in the field long beyond the life span of the typical desktop OS. Also, there is a very high cost associated with developing and maintaining proprietary software. Balancing ever-shorter product life cycles and shortages of programmers against increased demands for reliability and ease of use is rapidly becoming an overwhelming undertaking.

OS Software: the Linux Alternative

It is this dilemma that is generating the enormous level of interest in Linux. Leveraging the work of thousands of contributors can cut development time, increase the richness of functionality in products and lower costs while maintaining the proprietary control that is critical to long-term support of mass-market products.

Although nothing comes without a price, the royalty-free nature of the Linux OS makes it particularly attractive as a solution for high-volume applications where the success of a product can hinge on achieving low overall production cost. The modularity, scalability and flexibility of Linux add to its attractiveness as a base from which to begin.

The Challenge for Linux

A change in mindset is imperative. If the goal is to challenge Microsoft Windows for the desktop, then Linux will not succeed in the embedded world. Creeping featurism has hindered Microsoft's ability to penetrate the embedded market, and it will hold Linux back as well.

There are indications that this is understood by the many “embedded Linux” companies that are emerging. The greatest challenge for the Linux developer community will be to address the cost-constrained resources of typical high-volume, embedded internet-connected devices. Small memory footprints, minimal storage and fewer processor cycles are all critical considerations.

Designers must also have the ability to customize the user interface to match the end use of the hardware device. The lower processor speeds and simpler display characteristics in most non-PC internet devices will require a very different approach to user interfaces.

Flexibility in customizing browsers, user interfaces and the look and feel that the end user will experience is critical if the manufacturer is to have the ability to create unique value-added products.

Take a Lesson from Consumer Products

Consumers have grown tired of their VCRs flashing “12:00 A.M.”, mocking their frustration with products designed for individuals with engineering degrees. Consumer products companies spend millions on market research, focus groups and test marketing. They do not rely simply on what consumers say they want. They watch how consumers use their products, conduct post-sales surveys and constantly fine-tune. Their research tells them that their systems must be intuitive, easy to use and reliable. They know they would sell few VCRs if consumers had to spend 15 minutes with an instruction manual each time they wanted to change the channel, or if the television displayed “Error message 4863: missing file channel.exe”.

Target the Product to the Needs of the User

Foremost in determining the success of a product is ease of use. It doesn't matter whether the end user is a novice or an expert; everyone appreciates a product that has an intuitive interface and is easy to use. Software designers often make assumptions about the level of sophistication of the end users of their products. Everyone knows that generalizing or making assumptions can be dangerous. Even if a product is targeted at a highly educated audience, it is always a good rule of thumb to keep in mind that people have their particular areas of specialization. None of us would board an aircraft knowing that the person who checked out the collision avoidance electronics system was a Nobel Prize-winning economist.

Hardware: System-on-a-Chip Alternatives

The ever-increasing number of processor platforms available to the embedded market makes the selection of an appropriate platform hazardous. Although many claim to be SOC, few deliver on their claims.

RISC vs. x86

The x86 has many advantages when compared to RISC devices. The x86 is almost always the first platform supported, due to the existing dominance of this architecture in the desktop.

Also, the legacy x86 peripheral hardware support base will be difficult for RISC to overtake. Ethernet, modems, graphics controllers, HomePNA, etc., are readily available and fully support the x86.

Integrated RISC designs require custom or semi-custom chip development and large investments in design tools. This often makes these designs expensive, time consuming and only available to major corporations.

RISC firmware, OS and application development takes longer and requires unique expertise. The shortage of chip-level RISC designers often requires the integration to be done by third parties.

The standards created by the dominance of the IBM PC, and the volumes it has generated, make the x86 the only processor architecture that meets many of the requirements to consider when selecting a platform for an embedded application. Whether selecting at the semiconductor component level or at the board level, look for products that offer the following:

- Design for embedded applications with features required for enhanced reliability.

- Comprehensive development tools.

- Wide choice of operating systems to allow greater flexibility, lower cost and firmware support for many of the chips required to complement the design.

- High levels of integration to minimize chip count and interconnect points to drive system reliability up.

- Production guarantees that are in tune with the embedded marketplace cycle time to minimize component obsolescence.

- Extensive assortment of low-cost peripherals with readily available driver software.

- Well-documented x86 architecture with standard interfaces such as ISA, PCI, I2C, USB, etc., to allow seamless interconnection.

- Compatibility with off-the-shelf application software, so user interfaces can be designed quickly with a familiar look and feel.

- Low power consumption to permit long battery life and operation in harsh environments where airflow for heat dissipation is restricted.

- Redundant systems for virus immunity and crash recovery.
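The link between chip count and reliability in the list above can be illustrated with the standard series-system model: if a board fails whenever any one part fails, and each part has a constant failure rate, the rates add and the MTBF is the reciprocal of their sum. The sketch below is a minimal illustration under that assumption. The part names and failure rates are hypothetical values chosen for the example, not data from any vendor or from the designs described in this article.

```python
def series_mtbf_hours(failure_rates_per_million_hours):
    """MTBF of a series system: one million hours over the summed failure rates."""
    total = sum(failure_rates_per_million_hours)
    return 1_000_000 / total


# Hypothetical failure rates, in failures per million hours.
discrete_design = {
    "cpu": 2.0,
    "chipset": 2.0,
    "super_io": 1.0,
    "ethernet": 1.0,
    "connectors_and_solder_joints": 4.0,
}
integrated_design = {
    "soc": 3.0,
    "ethernet": 1.0,
    "connectors_and_solder_joints": 1.0,
}

discrete = series_mtbf_hours(discrete_design.values())
integrated = series_mtbf_hours(integrated_design.values())
print(f"discrete:   {discrete:,.0f} h")
print(f"integrated: {integrated:,.0f} h")
```

With these made-up inputs, halving the summed failure rate doubles the predicted MTBF. Real predictions depend on measured part data and operating conditions.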

Standards: Risks and Rewards

It is tempting to use standards because they can greatly shorten a development project's time to market. However, decisions regarding which technologies to use require a discriminating thought process. Product designers must strike a balance between time-to-production constraints, overall system cost and ease of use for the end user.

The desktop architecture brings significant advantages to overall system cost in terms of hardware and software. The almost 20-year legacy of the Wintel PC has created significant breakthroughs in processing power, hardware and software development tools, and the ability to piggyback on some of the economies of scale that the desktop market has driven. Component costs for key peripherals have been driven down significantly. This makes it possible for designers to shorten schedules and include features and options in their systems that would have been out of the question in the past.

The same desktop market influences that have brought these advantages also bring significant risks. The first is component obsolescence. The fast-moving pace of the desktop market causes both hardware and software technologies to become obsolete at a pace that far exceeds the requirements of achieving adequate return on investment in the embedded world. The software of the desktop has ballooned to a point that makes it unreliable and unwieldy in most embedded applications. The level of complexity and massive size of desktop programs are only possible because microprocessor manufacturers remain locked in a megahertz race. Increases in memory capacity have only added to the problem. More megahertz typically means higher total system cost in the form of additional overhead, more components, more heat to dissipate and more code to debug. Moore's Law is only possible in a pure horsepower play. Ultra high performance is rarely associated with stability and reliability. Remember, a race car only has to last to the end of one race. Consumer products have to work reliably until we tire of them.

Seek a Balance

Automobile manufacturers have managed to create vehicles that are better for the environment and provide consumers with increased driving pleasure and greater safety. They have done this by creating technology that strikes a balance between performance and functionality. These vehicles are lighter and more aerodynamic, and they are powered by smaller engines that consume less fuel and emit fewer pollutants. A clear indicator of a mature industry is that it is no longer enamored with its ability to innovate merely to prove that it can. Innovations in the automobile industry are driven by consumer demands, expediency and government regulation.

Design Examples

To illustrate these concepts in a practical application, here are two examples of systems constructed using the concepts discussed in this article.

The first is an internet appliance/thin-client design that uses standard buses to interconnect readily available PC peripheral chips to an x86 SOC (see Figure 2). The operating system is Linux with a reduced footprint browser.

The second example is a design completed by Tri-M Systems, a manufacturer of embedded electronics in Vancouver, BC. This is a PC/104 internet control computer that uses standard buses to add the analog devices needed to sense the conditions in the system (see Figure 3). Using the Linux operating system and a simple browser allows communication to the external world using HTML pages.
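The idea of exposing a control computer to the outside world through HTML pages can be sketched in a few lines. The example below is not Tri-M's software, which this article does not describe. It is a minimal illustration using Python's standard `http.server` module, and `read_sensors`, `render_status_page`, `StatusHandler`, `serve` and the readings themselves are hypothetical names and values standing in for real analog input code.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def read_sensors():
    """Placeholder for real analog input; returns hypothetical readings."""
    return {"temperature_c": 21.5, "pressure_kpa": 101.3}


def render_status_page(readings):
    """Build a small HTML page that even a very simple browser can display."""
    rows = "".join(
        f"<tr><td>{name}</td><td>{value}</td></tr>"
        for name, value in readings.items()
    )
    return f"<html><body><h1>Status</h1><table>{rows}</table></body></html>"


class StatusHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Every GET request returns the current readings as one HTML page.
        body = render_status_page(read_sensors()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Serve the status page until the process is interrupted."""
    HTTPServer(("", port), StatusHandler).serve_forever()
```

Calling `serve()` on the device and pointing any browser at port 8080 would show the readings. Because the interface is plain HTML, the client needs no special software.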

Tri-M designed its latest two PC/104 boards around a single-chip PC, selecting the most highly integrated device it could find in order to substantially reduce component count and board real estate. The high level of integration lowered the total bill-of-materials cost, reduced assembly costs, increased mean time between failures (MTBF) and is expected to reduce costs for warranty and service support. The device was also selected for its power efficiency: even at its top operating speed of 133MHz, it consumes about half a watt. Less power consumption means less heat, less engineering to dissipate heat and longer battery life.

However, the main reason for selecting the x86 architecture was hardware and software compatibility. The ready availability of PC hardware, software and device drivers dramatically reduced time-to-market and made the product both backward and forward compatible with the rest of the PC/104 devices on the market.

The reduced time to production obtained by using a standard architecture is evident in the time required to design the two boards. The first design, although a very complex ten-layer CPU board, was completed in six weeks from start to prototype. The second design, using the MachZ PC-on-a-Chip (recently renamed ZFx86), took less than three days for a full six-layer PC/104 design from start to prototype. This reuse of standard hardware architecture and software drivers enables Tri-M to offer its customers customized solutions in record time.
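The effect of the half-watt figure on battery life can be estimated with simple division. The sketch below assumes an ideal battery and ignores regulator losses and the rest of the board. The 10 Wh capacity and the 5 W comparison load are hypothetical values chosen only to show the scale of the difference, and the 0.5 W figure from the text covers the processor chip alone.

```python
def runtime_hours(battery_wh: float, load_watts: float) -> float:
    """Ideal runtime, ignoring conversion losses and battery derating."""
    return battery_wh / load_watts


BATTERY_WH = 10.0  # hypothetical battery capacity in watt-hours

half_watt = runtime_hours(BATTERY_WH, 0.5)  # chip power quoted in the text
five_watt = runtime_hours(BATTERY_WH, 5.0)  # hypothetical comparison load
print(f"0.5 W load: {half_watt:.0f} h")
print(f"5.0 W load: {five_watt:.0f} h")
```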

About the author: David L. Feldman is CEO of ZF Micro Devices, Inc., Palo Alto, California, supplier of embedded systems and the first FailSafe PC-on-a-Chip. The company was founded in April 1995. In 1983 Mr. Feldman created the 5¼-inch form factor for embedded computers. He was the founder and former chief executive of Ampro Computers and the creator of the PC/104 concept. He has more than 20 years of experience in the embedded systems market.

Copyright © 2001 Specialized Systems Consultants, Inc. All rights reserved. Embedded Linux Journal Online is a cooperative project of Embedded Linux Journal and LinuxDevices.com.

This article was originally published on LinuxDevices.com and has been donated to the open source community by QuinStreet Inc.