Building an Ogg Theora camera using an FPGA and embedded Linux

Mar 23, 2005, by LinuxDevices Staff, from the LinuxDevices Archive

Foreword: This article introduces a network camera based on embedded Linux, an open FPGA, and a free, open codec called Ogg Theora. Author Andrey Filippov, who designed the camera, says it is the first high-resolution, high frame-rate digital camera to offer a low bit rate. Enjoy . . . !

Background

Most of the Elphel cameras were first introduced on LinuxDevices.com, and the new Model 333 camera will follow this tradition. The first was the Model 303, a high speed gated Intensified camera that used embedded Linux running on an Axis ETRAX100LX processor. The second — the Model 313 — added the high performance of a reconfigurable FPGA (field programmable gate array), a 300K-gate Xilinx Spartan-2e that was capable of JPEG encoding 1280×1024 @ 15fps. This rate was later increased to 22 fps, at the same time that a higher-resolution Micron image sensor provided 1600×1200 and 2048×1536 options. FPGA-based hardware solutions are very flexible, enabling the same camera main board to later be used without any modifications in a very different product — the Model 323 camera. The Model 323 has a huge 36 x 24mm Kodak CCD sensor, the KAI-11000, which supports 4004 x 2672 resolution for true snapshot operation.

What should the ideal network cameras be able to do?

The most common application for network cameras is video security. In order to take over from legacy analog systems, digital cameras must have high resolution, so that there will be no need to use rotating (PTZ) platforms, which can easily miss an important event by looking in a different direction at the wrong moment. But high resolution combined with high frame rate (which was already available in analog systems) produces huge amounts of video data that can easily saturate the network with just a few cameras. It also requires too much space on the hard drives for archiving. However, this is only true when using JPEG/MJPEG compression — true video compression can make a big difference.

So, the “ideal” network camera should combine three features: high resolution, high frame rate, and low bit rate.

No currently available cameras provide all three of these features at once. Some have low bit rate (e.g., MPEG-4) and high frame rate, but the resolution is low (same as analog cameras). Some have high resolution and high frame rate (including the Elphel 313), but the bit rate is high. This is because real-time video encoding of high-resolution data is a challenging task that needs a lot of processing power.

An FPGA that can handle the job

The FPGA in the model 313 has 98 percent utilization, so not much can be added. But after that camera was built, more powerful FPGAs became available. The new model 333 camera uses a million-gate Xilinx Spartan 3, which has three times more general resources, is faster, and has some useful new features (including embedded multipliers and DDR I/O support), all in the same compact FT256 package as the previously used Spartan 2e.

As soon as support for this FPGA was added to Xilinx's free-for-download WebPack tools (I hope that one day they will release really Free software, not just free “as in beer”), I was ready for the new design. Support by free development software is essential for our products, as they come with all the source code (including the routines for the FPGA, written in Verilog HDL) under the GNU GPL. We want our customers to be able to hack into, and improve, our designs.

Hardware details

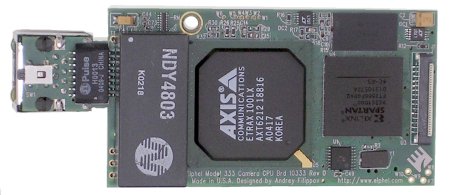

The model 333 electronic design was based on that of the previous model, and has the same CPU and center portion of the rather compact PC board layout, where the data bus connects processor and memory (SDRAM and flash) chips to each other. In addition to the FPGA, all the memory components were upgraded; Flash is now 16MB (instead of 8MB), and system SDRAM is 32MB (instead of 16MB). The dedicated memory that connects directly to the FPGA is also twice as large, and it is also more than twice as fast — it is DDR. That memory is a very critical component for video compression, since each piece of data goes through this memory three times while being processed by the FPGA.

Despite these upgrades, the size of the board did not increase. Actually, I was even able to shrink the board a little. So, while the outside dimensions remain the same — 3.5 by 1.5 inches — the corners of the board near the network connector are cut out, so that the RJ-45 connector fits into a sealed shell, making the camera suitable for outdoor applications without requiring an external protective enclosure.

Model 333 camera main board

The Model 333, in a Model 313 case — the production version will use a weatherproof case

The right codec for the camera

While working on the hardware design, I didn't get involved in the video compression algorithms — I just estimated that something like p-frames of MPEG-2 can make a big difference with the fixed-view cameras, where most of the image is usually a constant background. And the next level of compression efficiency, motion compensation, needs higher memory bandwidth than is available in this camera, so I decided to skip it in this design, and leave it for future upgrades.

In August 2004, I had the first new camera hardware tested, and I ported the MJPEG functionality from the model 313 camera. It immediately ran faster — 1280 x 1024 @ 30fps, instead of 22fps — with frame rates limited by the sensor. I ordered a book about MPEG-2 implementation details. Only then did I discover that use of this standard (in contrast to JPEG/MJPEG) requires licensing. The license fee is reasonable, and rather small compared to the hardware costs, but being an opponent of the idea of software patents, I didn't want to support it financially.

That meant that I had to look for an alternative codec, and it didn't take long to find a better solution, both technically and from a licensing perspective. It is Theora, developed by the Xiph.org Foundation. The algorithm is based on VP3, by On2 Technologies, which has granted an irrevocable royalty-free license to use VP3 and derivatives. Theora is an advanced video codec that competes with MPEG-4 and other low bit-rate video compression technologies.

FPGA implementation of the Theora encoder

At that point, I had both hardware to play with, and a codec to implement. As it turned out, the documentation is quite accurate. Being the first one to re-implement the codec from the documentation, I ran into just a single error, where there was a mismatch between the docs and the standard software implementation.

Even with a number of shortcuts I made (unlike a decoder, an encoder need not implement all possible features), it was still not an easy task. I omitted motion vectors and the loop filter, and still the required memory bandwidth turned out to be rather high — with FPN (fixed-pattern noise) correction enabled, the total data rate was about 95 percent of the theoretical bandwidth of the SDRAM chip I used (500MB/sec @ 125MHz clock). For each pixel encoded, the memory should:

- Provide FPN correction data (2 bytes)

- Receive corrected pixel data and store it in scan-line order (1 byte)

- Provide data to the color converter (Bayer->YCbCr 4:2:0) that is connected to the compressor. Data is sent in 20×20 overlapping tiles for each 16×16 pixels, so each pixel needs (400/256)~=1.56 bytes

- For the INTER frames, reference frame data (that is subtracted from the current frame) is needed, and each frame produces a new reference frame. That gives 2*1.5=3 bytes more (1.5 is used because in YCbCr 4:2:0 encoding each 4 sensor pixels provide 4 intensity values (Y) and one of each of the color components (Cb and Cr), that is, 6 bytes per 4 pixels)

And that is not all. In the Theora format, quantized DCT components are globally reordered before being sent out: first come all the DC components (average values of 8×8 blocks), both luma (Y) and chroma (Cb and Cr); then all the rest, the AC components (from lower to higher spatial frequencies), each in the same order. And, as the 8×8 DCT processes data in 64 pixel blocks, yielding all 64 coefficients (DC and 63 AC) together, the whole frame of coefficient data has to be stored before reaching the compressor output. As this intermediate data needs 12 bits per coefficient, it adds 2*1.5*(12/8) = 4.5 more bytes per pixel.

The total amount of data to be transferred to/from SDRAM is 12.11 bytes per pixel; and, as 1280×1024 at 30fps corresponds to an average pixel rate of 39.3MPix/sec, 476MB/sec of bandwidth is needed. So, it could fit in the 500MB/sec available. But normally, such efficiency in SDRAM transfer is achieved only when the data is written and read continuously, so that it is easy to organize bank interleaving such that the activation/precharge operations on other banks are hidden while the active bank is sending or receiving data.
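The budget above can be re-derived with a few lines of arithmetic. The following is only a sketch: the dictionary keys are made-up labels for the items listed in the text. Note that the itemized figures add up to roughly 12.06 bytes per pixel, slightly below the 12.11 quoted; the text does not account for the difference, so the quoted total is used as given for the bandwidth figure.

```python
# Per-pixel SDRAM traffic in bytes, using the figures listed in the article.
# The key names are labels invented for this sketch.
TILE = 20        # edge of the overlapping tile sent to the color converter
MACROBLOCK = 16  # edge of the pixel area each tile actually covers
YCBCR420 = 1.5   # bytes per sensor pixel in YCbCr 4:2:0

traffic = {
    "fpn_correction_read": 2.0,
    "corrected_pixel_write": 1.0,
    "tile_read": TILE * TILE / (MACROBLOCK * MACROBLOCK),  # 400/256
    "reference_frame_read_and_write": 2 * YCBCR420,
    "token_write_and_read": 2 * YCBCR420 * 12 / 8,         # 12-bit tokens
}


def pixel_rate(width, height, fps):
    """Average pixel rate in pixels per second."""
    return width * height * fps


def bandwidth_mb_per_s(bytes_per_pixel, width, height, fps):
    """Required memory bandwidth in MB/sec (1 MB = 10**6 bytes)."""
    return bytes_per_pixel * pixel_rate(width, height, fps) / 1e6


itemized = sum(traffic.values())
print(round(itemized, 2))                                  # 12.06
print(round(bandwidth_mb_per_s(12.11, 1280, 1024, 30)))    # 476
```

Either total leaves only about five percent of the 500MB/sec theoretical bandwidth unused, which is why the access pattern matters so much.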

Here, the task was more complicated, especially when writing and reading the intermediate data (quantized DCT coefficients stored as 12-bit tokens), since the write and read sequences are nearly orthogonal to each other: tokens that are close while being written are very far apart while being read out (and vice versa). “Very far” in this case means much farther than can be buffered inside the FPGA, which has 24 embedded memory blocks of 2KB each.
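A toy model may help to see how far apart the two orders are. It is a simplification that assumes one token per coefficient and ignores the plane and superblock ordering; the function names are hypothetical and do not come from the camera code.

```python
COEFFS_PER_BLOCK = 64


def write_position(block, coef):
    """Position of a token in the order the first stage produces it (block by block)."""
    return block * COEFFS_PER_BLOCK + coef


def read_order(num_blocks):
    """Order in which the second stage consumes tokens: coefficient index outermost."""
    for coef in range(COEFFS_PER_BLOCK):
        for block in range(num_blocks):
            yield block, coef


def max_write_gap(num_blocks):
    """Largest distance, in write positions, between two tokens read back to back."""
    positions = [write_position(b, c) for b, c in read_order(num_blocks)]
    return max(abs(b - a) for a, b in zip(positions, positions[1:]))


print(list(read_order(2))[:3])  # [(0, 0), (1, 0), (0, 1)]
print(max_write_gap(4))         # 191
```

Even in this toy model, neighbors in the read order are at least 64 positions apart in the write order, and the gap grows with the number of blocks. Under the same one-token-per-coefficient assumption, a 1280×1024 frame in 4:2:0 holds 1280 × 1024 × 1.5 coefficients at 1.5 bytes each, about 2.9MB, compared with the 48KB of embedded memory in the FPGA.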

All this made the memory controller design one of the trickiest parts of the system. Yet, it is a job that an FPGA can handle much better than many general purpose SDRAM controllers, since the specially designed data structures can be “compiled” into the hardware.

After the concurrent eight-channel DDR SDRAM controller code was written and simulated, the rest was easier. The Bayer-to-YCbCr 4:2:0 conversion code was reused from the previous design, and DCT and IDCT were designed to follow exactly the Theora documentation. Each stage uses embedded multipliers available in the FPGA that run on twice the YCbCr pixel clock (now 125MHz), so only four multipliers are needed. The quantizer and dequantizer use the embedded memory blocks to store multiplication tables prepared by the software in advance, according to the codec specs.
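One common way to quantize with a multiplier and a precomputed table is to store fixed-point reciprocals of the quantizer steps. Whether the camera's tables hold exactly this is an assumption; the 16-bit precision, the rounding rule, and all names below are illustrative only.

```python
SHIFT = 16  # fixed-point precision of the reciprocal; an assumption for this sketch


def build_reciprocal_table(quant_values):
    """Precompute round(2**SHIFT / q) for each quantizer step (the software's job)."""
    return [((1 << SHIFT) + q // 2) // q for q in quant_values]


def quantize(coef, reciprocal):
    """Divide by the quantizer step with one multiply and one shift, rounding to nearest."""
    return (coef * reciprocal + (1 << (SHIFT - 1))) >> SHIFT


def dequantize(level, q):
    """Reconstruct the coefficient value the decoder will see."""
    return level * q


steps = [3, 16, 33]
table = build_reciprocal_table(steps)
level = quantize(100, table[1])
print(level, dequantize(level, steps[1]))  # 6 96
```

The multiply replaces a division, which is what makes the embedded multipliers useful here; the dequantizer needs only the step itself.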

The DC prediction module is a mandatory part of the compressor that uses an additional memory block to store information from the DC components of the blocks in the previous row. Based on this approach, with one block, it is possible to process frames as wide as 4096 pixels. Output from this module is combined with the AC coefficients, and they are all processed in reverse zig-zag order, to extract zero runs and prepare tokens to be encoded to the output data. Because this is done in a different order, these 12-bit tokens are first stored in the SDRAM.
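The zero-run extraction can be sketched as follows. This is not the Theora token set: the `(zero_run, value)` pairs and the `"EOB"` marker are simplified stand-ins, the zig-zag path is the conventional 8×8 one, and the sketch scans forward and trims trailing zeros, whereas the text describes the hardware walking the path in reverse.

```python
def zigzag_order(n=8):
    """Return (row, col) pairs of an n x n block in conventional zig-zag order."""
    order = []
    for s in range(2 * n - 1):
        diagonal = [(r, s - r) for r in range(n) if 0 <= s - r < n]
        # Odd diagonals run with the row increasing; even ones are reversed.
        order.extend(diagonal if s % 2 else reversed(diagonal))
    return order


def zero_run_tokens(block):
    """Turn a block of quantized coefficients into (zero_run, value) pairs plus an end marker."""
    scan = [block[r][c] for r, c in zigzag_order(len(block))]
    # Trailing zeros are covered by the end-of-block marker.
    last = max((i for i, v in enumerate(scan) if v != 0), default=-1)
    tokens, run = [], 0
    for v in scan[:last + 1]:
        if v == 0:
            run += 1
        else:
            tokens.append((run, v))
            run = 0
    tokens.append("EOB")
    return tokens


block = [[0] * 8 for _ in range(8)]
block[0][0] = 5    # DC
block[1][0] = -2   # third position on the zig-zag path
print(zero_run_tokens(block))  # [(0, 5), (1, -2), 'EOB']
```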

When the complete frame of tokens is stored in SDRAM, the second encoder stage starts while the first stage is processing the next acquired frame. Tokens are read to the FPGA in the “coded” order. The outer loop goes through DCT coefficients, starting from DC and then AC, from the lowest to the highest spatial frequencies. For each coefficient index, color planes (Y, Cb and Cr) are iterated. For each plane, superblocks (32×32 pixel squares) are scanned row first, left to right, then bottom to top. And, in each superblock, 8×8 pixel blocks are scanned in Hilbert order (for 4×4 blocks this sequence looks like an upper-case omega with a dip at the very top).
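The block scan inside one plane can be sketched like this. The 4×4 table is transcribed to match the omega-shaped path described above and should be checked against the Theora specification before being relied on; the function names are hypothetical.

```python
# Block positions (x, y) inside a superblock of 4x4 blocks, with y counted
# upward from the bottom row, listed in the order they are visited.
HILBERT_4X4 = [
    (0, 0), (1, 0), (1, 1), (0, 1),
    (0, 2), (0, 3), (1, 3), (1, 2),
    (2, 2), (2, 3), (3, 3), (3, 2),
    (3, 1), (2, 1), (2, 0), (3, 0),
]


def blocks_in_coded_order(width_blocks, height_blocks):
    """Yield (x, y) of every 8x8 block of one plane: superblocks left to right,
    then bottom to top, and Hilbert order inside each superblock."""
    for sb_y in range(0, height_blocks, 4):
        for sb_x in range(0, width_blocks, 4):
            for dx, dy in HILBERT_4X4:
                x, y = sb_x + dx, sb_y + dy
                if x < width_blocks and y < height_blocks:  # partial superblocks at the edges
                    yield x, y


def render(order, size=4):
    """Draw the visiting index of each block, top row printed first."""
    grid = [[0] * size for _ in range(size)]
    for index, (x, y) in enumerate(order):
        grid[y][x] = index
    return "\n".join(" ".join(f"{v:2d}" for v in row) for row in reversed(grid))


print(render(HILBERT_4X4))
#  5  6  9 10
#  4  7  8 11
#  3  2 13 12
#  0  1 14 15
```

The full coded order wraps this scan in two more loops, coefficient index outermost and color plane next, as described in the text.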

It is very likely that multiple consecutive blocks will have only zeros for all AC coefficients. These zero runs are combined in special EOB (end of block) tokens — that could not be done in the first stage, since at that point the neighbors in the coded order were processed far apart in time.
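Merging the end-of-block markers of neighboring blocks is a plain run-length pass over the token stream once it is in coded order. A minimal sketch, with hypothetical token names; a real encoder would also have to respect the run-length limits of the Theora token set, which this ignores.

```python
def merge_eob_runs(tokens):
    """Collapse consecutive "EOB" markers (in coded order) into ("EOB_RUN", count)."""
    merged, run = [], 0
    for token in tokens:
        if token == "EOB":
            run += 1
            continue
        if run:
            merged.append(("EOB_RUN", run))
            run = 0
        merged.append(token)
    if run:
        merged.append(("EOB_RUN", run))
    return merged


stream = [("COEF", 5), "EOB", "EOB", "EOB", ("COEF", -1), "EOB"]
print(merge_eob_runs(stream))
# [('COEF', 5), ('EOB_RUN', 3), ('COEF', -1), ('EOB_RUN', 1)]
```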

Now all the tokens — both the DCT coefficient ones received from the SDRAM, and the newly calculated EOB runs — are encoded using Huffman tables (individual for color planes and for the groups of coefficient indices). The tables themselves are loaded into the embedded memory block by the software before the compression. The resulting variable-length data is consolidated into 16-bit words, buffered, and later sent out to system memory using 32-bit wide DMA.
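Consolidating variable-length codes into fixed-size words is a small bit-packing routine. The sketch below assumes codes are emitted most significant bit first and that the last word is padded with zeros; both choices, and the function name, are assumptions for illustration.

```python
def pack_codes(codes, word_bits=16):
    """Concatenate (value, length) codes MSB first and cut the stream into
    fixed-size words. The final word is padded with zero bits."""
    words, acc, nbits = [], 0, 0
    mask = (1 << word_bits) - 1
    for value, length in codes:
        if not 0 <= value < (1 << length):
            raise ValueError("code value does not fit in its length")
        acc = (acc << length) | value
        nbits += length
        while nbits >= word_bits:
            nbits -= word_bits
            words.append((acc >> nbits) & mask)
            acc &= (1 << nbits) - 1  # keep only the bits not yet emitted
    if nbits:
        words.append((acc << (word_bits - nbits)) & mask)
    return words


print([hex(w) for w in pack_codes([(0xABC, 12), (0xDEF, 12)])])
# ['0xabcd', '0xef00']
```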

Results, credits and plans

At this point, the very basic software has been developed, and the most obvious bugs in the FPGA implementation of the Theora encoder have been found and fixed. The camera has been successfully tested with a 1280×1024 sensor running at 30 fps (the camera can also run with a 2048×1536 sensor at 12fps, and can accommodate future sensors up to 4.5MPix).

Basically, the current software was developed to serve as a test bench for the FPGA. It does not have any streamer yet — the short hardware-compressed clips (up to 18MB) are stored in the camera memory, and then later sent out as an Ogg-encapsulated file. I do not think it will take long to implement a streamer — there is a team of programmers that came together nearly a year ago when Elphel announced a software competition for the best video streamer for the previous JPEG/MJPEG model 313 camera in a Russian online magazine, Computerra. Thanks to that effort, the model 313 now has seven alternative streamers, some running as fast as 1280×1024 at 22fps (FPGA limited) and sending out up to 70Mbps (that rate is needed only for very high JPEG quality settings).

The winner of that competition — Alexander Melichenko (Kiev, Ukraine) — was able to create the first version of his streamer before he even got the camera from us. ftp and telnet access to the camera over the Internet was enough to remotely install, run, and troubleshoot the application for the GNU/Linux system, which ran on a CPU he had never experienced previously (an Axis Communications ETRAX 100LX).

Sergey Khlutchin (Samara, Russia) customized the Knoppix Live CD GNU/Linux distribution, enabling our customers who normally use other operating systems to see the full capabilities of the camera. Apple's Quicktime player does a good job displaying the RTP/RTSP videostream that carries MJPEG from the camera, but we could not figure out how to get rid of the three second buffering delay of that proprietary product. And Mplayer, well, it seems to feel better when launched from GNU/Linux.

And this is the way to go for Elphel. We will not wait for the day when most of our customers are using FOSS (free and open source software) operating systems on their desktop. Thanks to Klaus Knopper, we can ship the Knoppix Live CD system with each of our cameras, including the new Model 333, which is the first network camera that combines high resolution, high frame rate, and low bit rate — and produces Ogg Theora video.

Afterword

According to Filippov, high-resolution, high-frame-rate, low-bit-rate video does present one challenge, at least for now: finding a system fast enough to decode the output at full resolution and full frame rate. Filippov has asked LinuxDevices readers with fast systems (such as dual-processor 3.6GHz Xeon systems) to download sample files and email success reports. He hopes to demonstrate the camera at an upcoming trade show, and is hoping to gauge how fast a system he'll need.

Says Filippov, “The decoders are not optimized enough yet (maybe the camera will somewhat push developers). Just today there was a posting with a patch that gives an 11 percent improvement. And, I hope that cheaper multi-core systems will be available soon. Finally, we could record full speed/full resolution video on the disk (to be able to analyze some videosecurity event later in detail), but render real-time (for the operator watching multiple cameras) with reduced resolution. It is possible to make software that will use abbreviated IDCT with resolution 1/2, 1/4 or 1/8 of the original. In the last case, just DC coefficients are needed — no DCT at all. For JPEG, such functions are already in libjpeg, and similar things can be done with Theora.”
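The 1/8-resolution case can be illustrated with a floating-point DCT: under orthonormal scaling, the DC term of an 8×8 block divided by 8 is the block mean, so one output pixel per block needs no inverse transform. This is a sketch only. Theora's integer transform has its own scaling, and its DC values are also predicted and quantized, so a real decoder would need extra steps not shown here.

```python
import math

N = 8


def dct2_coefficient(block, u, v):
    """One coefficient of the orthonormal 8x8 DCT-II of `block` (a list of 8 rows)."""
    cu = math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N)
    cv = math.sqrt(1 / N) if v == 0 else math.sqrt(2 / N)
    total = 0.0
    for y in range(N):
        for x in range(N):
            total += (block[y][x]
                      * math.cos((2 * y + 1) * u * math.pi / (2 * N))
                      * math.cos((2 * x + 1) * v * math.pi / (2 * N)))
    return cu * cv * total


def eighth_scale_pixel(dc):
    """Pixel of the 1/8-resolution image from the DC term alone: the block mean."""
    return dc / N


# An arbitrary test pattern, not real image data.
block = [[(x * y) % 17 + 100 for x in range(N)] for y in range(N)]
mean = sum(map(sum, block)) / (N * N)
print(abs(eighth_scale_pixel(dct2_coefficient(block, 0, 0)) - mean) < 1e-9)  # True
```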

About the author: Andrey N. Filippov has a passion for applying modern technologies to embedded devices, especially in advanced imaging applications. He has over twenty years of experience in R&D, including high-speed high-resolution, mixed signal design, PLDs and FPGAs, and microprocessor-based embedded system hardware and software design, with a special focus on image acquisition methods for Laser Physics studies and computer automation of scientific experiments. Andrey holds a PhD in Physics from the Moscow Institute for Physics and Technology. This photo of the author was made using a Model 303 High Speed Gated Intensified Camera.

A Russian translation of this article is available here.

This article was originally published on LinuxDevices.com and has been donated to the open source community by QuinStreet Inc. Please visit LinuxToday.com for up-to-date news and articles about Linux and open source.