How many bits are really needed in the image pixels?

Feb 6, 2006, by LinuxDevices Staff, from the LinuxDevices Archive

Modern CMOS image sensors provide high-resolution digital output of 10 to 12 bits. When CCD sensors are used, the same (or higher) resolution is provided by a separate ADC or an integrated CCD signal processor. This resolution is significantly higher than that used in popular image and video formats, most of which are limited to 8 bits per pixel or 8 bits per color channel, depending on the format. To catch up with the sensor and provide higher dynamic range, many cameras switch to formats that support more bits per pixel, and some of them store uncompressed raw data with the full dynamic range of the sensor. Such formats use significantly more storage space and consume more bandwidth for transmission.

Do these formats always preserve more of the information registered by the sensors? If the sensor has 12-bit digital output, does that mean that when using 8-bit JPEG, the four least significant bits are just wasted, and therefore a raw format is required to preserve them?

In most cases the answer is “no”. As sensor technology advances, pixels get smaller and smaller, approaching the natural limit set by the wavelength of light. Pixels smaller than 2×2 microns are now common, for example in the sensors used in mobile phone cameras. One consequence is a reduced Full Well Capacity (FWC): the maximal number of electrons that each pixel can accumulate without spilling them over. This matters because of shot noise, the random variation in the number of electrons collected. (The number is always an integer, as there can be no ½ electron.) Shot noise is caused by the quantum nature of electric charge itself, and there is no way to eliminate or reduce it for a particular pixel measurement. Its standard deviation equals the square root of the total number of electrons in the pixel, so in absolute terms it is highest when the pixel is almost full.
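To see the square-root behaviour numerically, the Python sketch below draws Poisson-distributed electron counts for a pixel under constant illumination and compares the frame-to-frame standard deviation with the square root of the mean. Modelling shot noise as a Poisson process is a standard assumption rather than something stated in the text, and the helpers `poisson_sample` and `simulate_pixel` are hypothetical names. Knuth's sampling method used here is only practical for small means such as the 100-electron example in the figure.

```python
import math
import random


def poisson_sample(mean: float, rng: random.Random) -> int:
    """Draw one Poisson-distributed count using Knuth's multiplication method.

    Only practical for small means: exp(-mean) underflows to 0.0 for means
    above roughly 745, and the loop runs about `mean` times per sample.
    """
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def simulate_pixel(mean_electrons: float, frames: int,
                   seed: int = 1) -> tuple[float, float]:
    """Return the sample mean and standard deviation of one pixel's electron
    count over `frames` exposures under identical illumination."""
    rng = random.Random(seed)
    counts = [poisson_sample(mean_electrons, rng) for _ in range(frames)]
    mean = sum(counts) / frames
    var = sum((c - mean) ** 2 for c in counts) / (frames - 1)
    return mean, math.sqrt(var)


if __name__ == "__main__":
    for level in (4, 25, 100):
        m, sd = simulate_pixel(level, frames=10000)
        print(f"mean {m:7.2f} e-   std {sd:5.2f} e-   sqrt(mean) {math.sqrt(level):5.2f}")
```

Each printed standard deviation should sit close to the square root of its mean, so the absolute noise grows with the signal even though the relative noise shrinks.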

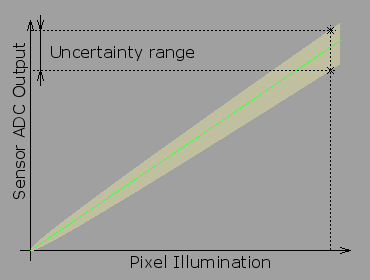

Pixel output uncertainty caused by the shot noise

If we track the value of a pixel under ideal conditions, with the same illumination and the same camera settings, the measured value will still change from frame to frame. The image above shows what happens with a hypothetical sensor with an FWC of just 100 electrons. In real sensors the relative uncertainty is smaller, but it can still significantly reduce the amount of information received. Our measurements show that a typical Micron/Aptina 5-megapixel sensor (MT9P031) with 2.2×2.2 micron pixels has an FWC of ≈8500 electrons. As a result, when the sensor is almost full, the pixel value fluctuates as 8500 ± 92 (√8500 ≈ 92), or more than ±1%. Such a fluctuation corresponds to 44 counts of the sensor's 12-bit ADC.

So is there any real need for such a high-resolution ADC when, for large signals, the 5-6 least significant bits don't carry any real information? Yes, because modern sensors have very low readout noise, and in the dark a single ADC count is meaningful: the square root of zero is zero. (Actually, even in pitch black there are some thermally generated electrons in the pixels, with no light at all.) Since ADC counts carry different amounts of information for small and large signals, it is possible to recode the pixel output so that every output count carries roughly the same amount of information.
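The same point can be expressed directly in ADC counts. This sketch assumes, as a simplification, that the ADC full scale corresponds exactly to the FWC and that shot noise and readout noise add in quadrature. The 2 e- readout noise is a hypothetical value chosen for illustration, not one measured for the MT9P031, and `noise_in_adc_counts` is a hypothetical helper.

```python
import math


def noise_in_adc_counts(signal_e: float, fwc_e: float, adc_bits: int,
                        read_noise_e: float) -> float:
    """Total pixel noise (shot + readout) expressed in ADC counts.

    Assumes ADC full scale == FWC and that the two noise sources are
    independent, so their variances add.
    """
    electrons_per_count = fwc_e / 2 ** adc_bits
    noise_e = math.sqrt(read_noise_e ** 2 + signal_e)
    return noise_e / electrons_per_count


if __name__ == "__main__":
    FWC = 8500         # value quoted in the text for the MT9P031
    BITS = 12
    READ_NOISE = 2.0   # hypothetical readout noise in electrons
    for signal in (0, 10, 100, 1000, 8500):
        counts = noise_in_adc_counts(signal, FWC, BITS, READ_NOISE)
        print(f"{signal:5d} e-  ->  {counts:6.2f} counts")
```

With these inputs the noise near full well comes out at about 44 counts, matching the figure above, while in the dark it stays below one count, which is why every LSB still matters for small signals.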

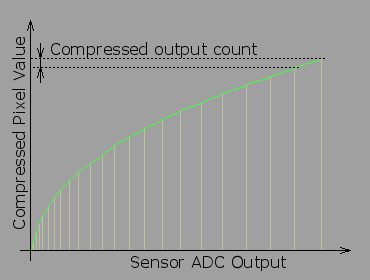

Non-linear conversion of the sensor output

This non-linear conversion assigns consecutive output numbers only to pixel levels that can be distinguished from the previous level in a single measurement. For small signals in the dark, each ADC output value gets its own output number, while for large signals a single output number covers more than 40 ADC counts. Such a conversion significantly reduces the number of output values, and therefore the number of bits required to encode them, without losing much of the information in the pixel values. The form below calculates the effective number of bits for given sensor parameters and a chosen ratio of the encoder step to the noise at each output level.

| Parameter | Unit | Role |
|---|---|---|
| ADC resolution | bits | input |
| Sensor full well capacity | e- | input |
| Sensor readout noise | e- | input |
| Step-to-noise ratio | | input |
| Number of distinct levels | | output |
| Effective number of bits | bits | output |
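The exact algorithm behind the original form is not given, so the following is only a hypothetical reconstruction of such a calculator. It assumes ADC full scale equals the FWC, total noise is the quadrature sum of shot and readout noise, and each output level spans `step_to_noise` times the noise at its lower edge, rounded down to whole ADC counts but never narrower than one count. The function names are illustrative.

```python
import bisect
import math


def build_levels(adc_bits: int, fwc_e: float, read_noise_e: float,
                 step_to_noise: float) -> list[int]:
    """Return the first ADC code of every output level (hypothetical calculator)."""
    full_scale = 2 ** adc_bits
    electrons_per_count = fwc_e / full_scale
    starts = []
    code = 0
    while code < full_scale:
        starts.append(code)
        # Total noise in electrons at the lower edge of this level.
        noise_e = math.sqrt(read_noise_e ** 2 + code * electrons_per_count)
        step_counts = int(step_to_noise * noise_e / electrons_per_count)
        code += max(1, step_counts)  # at least one ADC count per level
    return starts


def effective_bits(starts: list[int]) -> float:
    """Effective number of bits: log2 of the number of distinct levels."""
    return math.log2(len(starts))


def encode(adc_value: int, starts: list[int]) -> int:
    """Map a linear ADC value to the index of the level that contains it."""
    return bisect.bisect_right(starts, adc_value) - 1


if __name__ == "__main__":
    levels = build_levels(adc_bits=12, fwc_e=8500, read_noise_e=2.0, step_to_noise=1.0)
    print(len(levels), "levels,", round(effective_bits(levels), 2), "effective bits")
    # Lookup table for a 12-bit -> compact-code converter.
    lut = [encode(v, levels) for v in range(2 ** 12)]
    print(lut[:8], lut[-1])
```

For an FWC of 8500 e-, a 2 e- readout noise and a step-to-noise ratio of 1, this gives a couple of hundred levels, between 7 and 8 effective bits, far fewer than the 4096 codes of the 12-bit ADC. As a rough check, with negligible readout noise and a step-to-noise ratio of 1 the count approaches 2·√FWC. Keeping every level boundary on a whole ADC code means the result can be stored as a simple lookup table.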

Optimal encoding and gamma correction

Fortunately, nothing special has to be done to obtain the non-linear encoding that keeps the ratio of noise to output count constant, as described above.

All cameras incorporate some kind of gamma correction in the signal path. Historically it was needed to compensate for the non-linear transfer function of the electron guns in CRT monitors: cameras had to apply a non-linear function so that the two functions applied in series (camera and display) produced an image perceived to have contrast close to that of the original. With LCD displays this is no longer required, but gamma correction (or gamma compression) also does a nice job of transferring a higher-dynamic-range signal, even after the signal is converted to digital format. Different standards use slightly different values of gamma, usually in the range 0.45-0.55 on the camera side. Compression with gamma = 0.5 is exactly the square root function shown above, which is optimal for encoding in the presence of shot noise. With this kind of gamma encoding, an FWC of several hundred thousand electrons is needed to have 12 bits of meaningful data per pixel in the image file. Such high FWC values are available only in CCD image sensors with very large pixels.
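The claim that gamma = 0.5 keeps the step-to-noise ratio constant can be checked numerically. For a pure power law y = M·(S/FWC)^γ with M = 2^n − 1, the electron step between adjacent output codes divided by the shot noise √S tends to 2·√FWC/M for γ = 0.5, independent of the signal level, whereas for γ = 1 (linear encoding) it falls as the signal grows. The sketch ignores black level, readout noise and the linear toe that real transfer curves such as Rec. 709 or sRGB include; `gamma_step_to_noise` is a hypothetical helper.

```python
import math


def gamma_step_to_noise(code: int, fwc_e: float, out_bits: int,
                        gamma: float = 0.5) -> float:
    """Electron step between output codes `code` and `code + 1`, divided by
    the shot noise at `code` (code must be >= 1).

    Assumed encoder: y = M * (S / FWC) ** gamma, M = 2**out_bits - 1,
    with no black offset, no linear toe and no readout noise.
    """
    m = 2 ** out_bits - 1

    def electrons(y: float) -> float:
        # Inverse of the encoder: signal in electrons for output value y.
        return fwc_e * (y / m) ** (1.0 / gamma)

    step_e = electrons(code + 1) - electrons(code)
    shot_noise_e = math.sqrt(electrons(code))
    return step_e / shot_noise_e


if __name__ == "__main__":
    FWC = 8500  # value quoted in the text for the MT9P031
    print("code  gamma=0.5  gamma=1.0")
    for code in (16, 64, 128, 254):
        print(f"{code:4d}  {gamma_step_to_noise(code, FWC, 8):9.3f}"
              f"  {gamma_step_to_noise(code, FWC, 8, gamma=1.0):9.3f}")
    print("limit 2*sqrt(FWC)/M =", round(2 * math.sqrt(FWC) / 255, 3))
```

For the 8500 e- FWC quoted above and an 8-bit output, the γ = 0.5 ratio stays near 2·√8500/255 ≈ 0.72, so (ignoring readout noise) each 8-bit step is smaller than the shot noise at all but the very darkest codes.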

Measuring the sensor FWC

Earlier, I noted that our Aptina sensors have FWC ≈ 8500 e-. This figure was not provided by the manufacturer; we measured it ourselves. The FWC is important because it influences camera performance, so conversely, by measuring camera performance it is possible to calculate the FWC. The same method can be applied to most other cameras as well.

First, you need to be able to control the ISO setting (gain) of the camera and acquire a long series of completely out-of-focus images. (Remove the lens if possible; if not, open the iris completely and use a uniform target.) Set ISO (gain) to the minimum, and adjust the exposure so that the area to be analyzed has a pixel level close to maximal. Use natural lighting or DC-powered lamps/LEDs to minimize flicker caused by AC power. If it is a color sensor, use only the green pixels (or, with incandescent lamps, the red ones); this keeps the gain at its minimum.

Then measure the differences between the same pairs of pixels in multiple frames. (We used 100 pairs in 100 frames.) For each pair, find the root mean square of the frame-to-frame variation of the difference, and divide it by the square root of 2 to compensate for using differences between pixels rather than the pixel values themselves. (Working with pairs makes this method more tolerant of fluctuations in the total light intensity and of some uncontrolled parameter changes in the camera.) If gamma correction can be turned off, the ratio of the pixel value to this root mean square equals the square root of the number of electrons. If gamma correction cannot be controlled, one may assume a gamma of around 0.5.
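The pair-difference procedure can be sketched as follows. `estimate_electrons` follows the steps in the text; its gamma handling uses small-noise error propagation for an assumed pure power law y ∝ S^γ with no black offset, which gives S = (γ·y/σ)² and reduces to (y/σ)² for γ = 1. That gamma formula is an assumption, not part of the original method. `synthetic_frames` produces hypothetical test data (Gaussian approximation of shot noise, ±2% frame-to-frame light flicker) only so the sketch can run without a camera.

```python
import math
import random
import statistics

Frames = list[list[tuple[float, float]]]  # frames[f][p] = (a, b) for pair p in frame f


def estimate_electrons(frames: Frames, gamma: float = 1.0) -> float:
    """Estimate the electron count at the measured pixel level.

    For every pair, the difference a - b is taken in each frame; its
    frame-to-frame variance (about the pair's own mean difference, so fixed
    pixel-to-pixel offsets drop out) is halved, because the difference of two
    independent pixels has twice the variance of a single pixel.
    """
    n_pairs = len(frames[0])
    pixel_variances = []
    pixel_values = []
    for p in range(n_pairs):
        diffs = [frame[p][0] - frame[p][1] for frame in frames]
        pixel_variances.append(statistics.variance(diffs) / 2.0)
        for frame in frames:
            pixel_values.extend(frame[p])
    noise = math.sqrt(statistics.fmean(pixel_variances))  # RMS noise of one pixel
    level = statistics.fmean(pixel_values)                # mean pixel value
    # Linear response: sqrt(S) = level / noise. Assumed power law y ~ S**gamma:
    # sigma_y = gamma * y / sqrt(S), hence sqrt(S) = gamma * level / noise.
    return (gamma * level / noise) ** 2


def synthetic_frames(electrons: float, counts_per_e: float, n_pairs: int,
                     n_frames: int, seed: int = 0) -> Frames:
    """Hypothetical test data for a linear sensor with +/-2 % light flicker."""
    rng = random.Random(seed)
    frames = []
    for _ in range(n_frames):
        mean_e = electrons * (1.0 + rng.uniform(-0.02, 0.02))  # same flicker for all pixels
        sigma_e = math.sqrt(mean_e)  # Gaussian approximation of shot noise
        frame = []
        for _ in range(n_pairs):
            a = round(counts_per_e * rng.gauss(mean_e, sigma_e))
            b = round(counts_per_e * rng.gauss(mean_e, sigma_e))
            frame.append((a, b))
        frames.append(frame)
    return frames


if __name__ == "__main__":
    data = synthetic_frames(electrons=8000, counts_per_e=4096 / 8500,
                            n_pairs=100, n_frames=100)
    print("estimated electrons:", round(estimate_electrons(data)))
```

On this synthetic data (8000 electrons per pixel) the estimate should land within a few percent of 8000 despite the flicker, because the flicker is common to both pixels of a pair and cancels in the differences. With the exposure set close to saturation, as the text recommends, this count approximates the FWC.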

This article was originally published on LinuxDevices.com and has been donated to the open source community by QuinStreet Inc. Please visit LinuxToday.com for up-to-date news and articles about Linux and open source.