Nvidia boards target high-performance computing

Jan 26, 2010 — by LinuxDevices Staff — from the LinuxDevices Archive — 2 viewsNvidia announced parallel processors for the high performance computing (HPC) market, based on its GPU (graphics processing unit) architecture. The Tesla C2070 and C2050 are PCI Express x16 cards touted as “transforming a [Windows or Linux] workstation to perform like a small cluster,” with up to 640 Gigaflops of performance.

Nvidia's C2070 and C2050 harness Nvidia's "next-generation" GPU architecture, code-named "Fermi," which was first announced last October (see later in this story for background). Employing the GPUs for general-purpose parallel computing, the devices do not run Windows or Linux directly but, instead, are designed to run C, C++, OpenCL, DirectCompute, or Fortran while a workstation's CPU performs other tasks, according to the company.

Nvidia's Tesla C2070/C2050

(Click to enlarge)

The C2070 and C2050 are PCI Express Gen2 cards that occupy two slots in a workstation, and include either 3GB or 6GB of onboard GDDR5 memory, respectively. Nvidia claims the cards' onboard GPUs offer performance that's equivalent to the latest quad-core CPUs, but with 1/20th the power consumption and 1/10th the cost.

According to Nvidia, the C2070 and C2050 offer from 520 to 640 Gigaflops of double precision performance, allowing applications such as ray tracing, 3D cloud computing, video encoding, database search, data analytics, computer-aided engineering, and virus scanning to be performed "dramatically faster." Four of the boards may be placed into a 1U enclosure that quadruples performance for data center deployments, the company adds.

Nvidia says the boards support the next-generation IEEE 754-2008 double-precision floating point standard. Providing ECC (error correction code) memory for their DRAM, shared memory, L1/L2 caches, and shared memory, they support PCI Express 2.0, for "fast and high-bandwidth communication between CPU and GPU," the company adds.

Specifications provided for the Tesla C2070 and C2050 by Nvidia include:

- Memory — 3GB DDR5 on C2050; 6GB DDR5 on C2070

- Double-precision floating point performance (peak) - 520 GFlops to 630 GFlops

- Form factor — Dual-slot PCI Express x16; 9.75 x 4.376 inches

- Power consumption - 190 Watts (typical)

- Software development tools — Cuda C/C++/Fortran, OpenCL, DirectCompute Toolkits

- Supported operating systems - Linux, Windows XP, Windows Vista

Background on Nvidia's Fermi

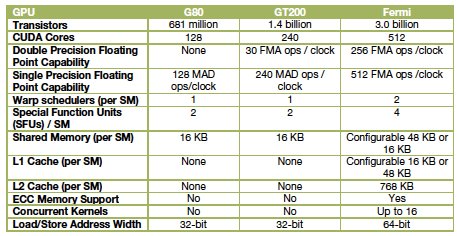

Fermi, announced last October at Nvidia's inaugural GPU Technology Conference in San Jose, amounts to a third generation of products embodying the company's "GPU computing" model. The first generation was the G80 unified graphics/computing architecture, introduced in November 2006 and later embodied in the GeForce 8800, Quadro FX 6500, and Tesla C870 GPU products. The G80 was the first GPU to replace separate vertex and pixel pipelines with a single unified processor, the first to utilize a scalar thread processor, and the first to support C, according to the company.

The second generation was the GT200, introduced in the GeForce GTX 280, Quadro FX 5800, and Tesla T10 GPUs. GT200 increased the number of streaming processor cores — subsequently referred to as "Cuda" cores — from 128 to 240. It also added "hardware memory access coalescing," improving memory access efficiency, along with double-precision floating point support, Nvidia says.

A comparison of Nvidia GPU generations

Source: Nvidia

Fermi, implemented in a GPU containing more than three billion transistors, more than doubles the number of Cuda cores, organizing them into 16 SMs (streaming multiprocessors) with 32 cores apiece. Sporting up to 6GB of GDDR5 RAM, Fermi is the first product of its type to support ECC (error correcting code), the company says.

In October, Nvidia cited the following additional features for Fermi:

- 512 Cuda cores, with the new IEEE 754-2008 floating-point standard, surpassing even the most advanced CPUs

- 8x the peak double-precision arithmetic performance of the GT200

- Nvidia Parallel DataCache, a cache hierarchy that speeds up algorithms such as physics solvers, raytracing, and sparse matrix multiplication where data addresses are not known beforehand

- Nvidia GigaThread engine, supporting concurrent kernel execution, where different kernels of the same application context can execute on the GPU at the same time (eg, PhysX fluid and rigid body solvers)

According to Nvidia, Fermi-based products are so powerful that they can now be termed CGPUs (computational graphics processing units), and are suitable for high-performance computing (HPC) applications such as linear algebra, numerical simulation, and quantum chemistry. At Nvidia's GPU Technology Conference, Oak Ridge National Laboratory (ORNL) announced plans to build a new supercomputer that will employ the Fermi architecture, and also announced it will be creating the Hybrid Multicore Consortium, whose goals "are to work with the developers of major scientific codes to prepare those applications to run on the next generation of supercomputers built using GPUs."

Not surprisingly, Nvidia also said it will use Fermi in its next-generation GeForce, Quadro, and Tesla GPUs, along with graphics cards (right) that employ them. The first Fermi-based graphics card was rumored to be the GT300 (right), a 40nm part that will support Microsoft's DirectX 11.

Not surprisingly, Nvidia also said it will use Fermi in its next-generation GeForce, Quadro, and Tesla GPUs, along with graphics cards (right) that employ them. The first Fermi-based graphics card was rumored to be the GT300 (right), a 40nm part that will support Microsoft's DirectX 11.

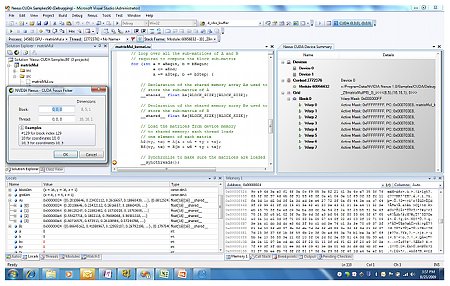

According to Nvidia, Fermi is the first product of its type that supports C++, complementing existing support for C, Fortran, Java, Python, OpenCL and DirectCompute. Fermi also supports Nexus (below), touted as "the world's first fully integrated heterogeneous computing application development environment within Microsoft Visual Studio."

Nvidia's Nexus

(Click to enlarge) Source: Nvidia

Nexus is composed of the following three components, according to Nvidia:

- The Nexus Debugger, a source code debugger for GPU source code, such as CUDA C, HLSL and DirectCompute. It supports source breakpoints, data breakpoints and direct GPU memory inspection. All debugging is performed directly on the hardware.

- The Nexus Analyzer, a system-wide performance tool for viewing GPU events (kernels, API calls, memory transfers) and CPU events (core allocation, threads and process events and waits) on a single, correlated timeline.

- The Nexus Graphics Inspector, which provides developers the ability to debug and profile frames rendered using APIs such as Direct3D. Developers can pause an application at any time and inspect the current frame, examining draw calls, textures, and vertex buffers, the company says.

Dr. Wen-mei Hwu, an electrical and computer engineering professor from the University of Illinois at Urbana-Champaign, is quoted as saying, "There can be no doubt that the future of computing is parallel processing, and it is vital that computer science students get a solid grounding in how to program new parallel architectures. GPUs and the Cuda programming model enable students to quickly understand parallel programming concepts and immediately get transformative speed increases."

Availability

According to Nvidia, the Tesla C2070 and C2050 will be available during the second quarter, retailing for approximately $4,000 and $2,500, respectively. More information on the boards may be found on the company's website, here.

More information on Nexus may be found on the Nvidia website, here. Meanwhile, overall background on Fermi, including a downloadable white paper, may be found here.

This article was originally published on LinuxDevices.com and has been donated to the open source community by QuinStreet Inc. Please visit LinuxToday.com for up-to-date news and articles about Linux and open source.