Nvidia upgrades toolkit for GPU programming

Nov 23, 2010 — by LinuxDevices Staff — from the LinuxDevices Archive — 1 viewsNvidia announced the availability of the production release of its CUDA (compute unified device architecture) Toolkit 3.2. This provides performance increases, new math libraries, and advanced cluster management features for developers creating next-generation applications accelerated via GPUs (graphics processing units), the company says.

CUDA is the computing engine in Nvidia GPUs (graphics processing units), accessible via standard programming languages and claimed to provide dramatic increases in computing performance. The CUDA Toolkit includes all the tools, libraries and documentation developers need to build CUDA C/C++ applications, and is the foundation for many other GPU computing language solutions, the chipmaker says.

New features and significant enhancements in Toolkit version 3.2 are said to include up to 300 percent performance improvement in CUDA Basic Linear Algebra Subroutines (BLAS, and CUDA BLAS is known as CUBLAS) library routines, delivering eight times faster performance than the latest Intel MKL (Math Kernel Library). CUDA Fast Fourier Transform (known as CUFFT) library optimizations deliver between two to 20 times faster performance than the latest MKL, Nvidia adds.

Moreover, Nvidia says CUDA Toolkit 3.2 provides a new CURAND library for random number generation at 10-20 times faster than the latest MKL, and new CUSPARSE library of sparse matrix routines that delivers 6-30 times faster performance than the latest MKL. And the new release also boasts a host of additional improvements to GPU debugging and performance analysis tools, according to the company.

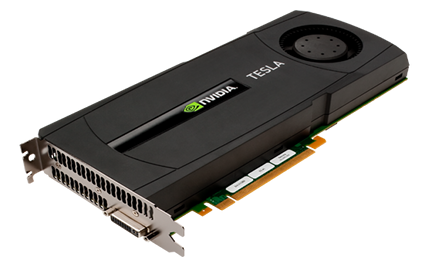

In addition, the new CUDA Toolkit 3.2 release is said to include H.264 encode/decode, new Tesla Compute Cluster (TCC) integration, cluster management features, and support for the new 6GB Nvidia Tesla and Quadro GPU products.

Nvidia's Tesla C2050 is a PCI Express board that adds the Fermi GPU, featuring 512 CUDA cores, to a Linux or Windows workstation

(Click to enlarge)

As we reported recently, Nvidia has announced that it will port the CUDA language, which currently runs on its current Fermi and forthcoming Kepler GPUs, directly to x86 chips. No doubt, performance will be much slower there, but the chipmaker apparently hopes such a move will drive sales of its GPUs.

Further information

Nvidia says it will host a webinar on Tuesday, Nov. 23 at 10:00 a.m. PT to review the new performance enhancements and capabilities of the new CUDA Toolkit. To register for the webinar, go here.

For further information and the latest CUDA Toolkit downloads, see the company's website, here.

Darryl K. Taft is a writer for eWEEK.

This article was originally published on LinuxDevices.com and has been donated to the open source community by QuinStreet Inc. Please visit LinuxToday.com for up-to-date news and articles about Linux and open source.