Updated review of robotics software platforms

Jul 29, 2008, by LinuxDevices Staff, from the LinuxDevices Archive

Foreword: Today's nascent robotics market has engendered nearly two dozen general-purpose software development frameworks, nearly all of which run on Linux. This article reviews ten, and briefly describes a few others, before concluding with an analysis of which platforms are best suited to which uses.

This article builds upon a very similar robotics software review that we published last year. Both reviews were written by Michael Somby, a service robotics hobbyist who works as a professional control systems engineer. Enjoy!

A review by Michael Somby

What is special about service robotics as compared to industrial robotics? Imagine it gets dark in a living room, and you turn on the light. For most industrial robots, such a change in the illumination pattern would be a catastrophic event. Those robots are designed to work in closely controlled industrial settings. Their computer vision systems are not able to cope with such changes in the illumination without reconfiguration or reprogramming.

On the other hand, interacting with the dynamic and changing world is a survival requirement for most service robots.

Figure 1 — A personal service robot (Stanford University)

New pieces of furniture, random obstacles, walking people, missing or unknown objects, unprofessional users, children, pets, cars on the road — those features comprise the dynamic environment where a service robot needs to be able to operate. Besides that, most service robots are mobile and work in close proximity to people.

The unique requirements of service robotics triggered the creation of an industry which develops new technology specifically designed for service robotics applications. This article reviews several software products available on the market for service robotics applications.

Introduction

Building a robot which makes up hotel rooms, cleans houses, or serves breakfast is the dream of every service roboticist. A company which delivers such a product at a reasonable price will most likely make huge sales. Militaries are looking for robots which can be used to fight wars; farmers would love robots to grow crops.

Figure 2 — DaVinci Surgical Robotic System

Nearly every industry has a use for service robots:

- Robotic elder-care

- Automatic health scanning and monitoring (health-care)

- Robotic surgery (health-care)

- Robotic delivery (hotels, hospitals, restaurants, offices)

- Robotic crop picking (agriculture)

- Unmanned convoys (defense)

- Bomb disposal (defense)

- Security, patrols

- Entertainment (home, museums, parks)

- Cleaning

- Mining

- Fire fighting

- and so on

There are many potential applications, and the good news is that the technology is getting there.

Service Robots of Today

Although service robotics is a relatively young industry, it has gone through a number of iterations of continuous technology improvement. Tele-operation was probably the first step in the evolution of practical service robots. Many of today's deployed service robots are still remotely controlled by a human operator. Every motion of those robots is initiated and controlled by a human.

Figure 3 — Healthcare tele-presence robot (InTouch Technologies Inc)

The military robots deployed in Iraq and Afghanistan, successful robotic surgery robots, and health-care telepresence robots are good examples of remotely-controlled service robots.

Figure 4 — Armed Talon MAARS robot (Foster-Miller)

Introduction of autonomous navigation and obstacle avoidance took service robotics to the next level. Indoor navigation came first, followed by outdoor self-driving technology.

Indoor localization, obstacle avoidance, and navigation have been researched in academia for many years, and have evolved into mature technology. A growing number of robots on the market can successfully find their way through typical office or home settings.

Figure 5 — Indoor mobile robots (MobileRobots Inc)

Indoor navigation technology relies heavily on laser range finders, infrared/ultrasound obstacle detectors, touch sensors, computer vision algorithms, and occupancy maps.

Figure 6 — Warehouse robots (KIVA Systems)

Outdoor robotic driving technology was propelled by three DARPA robotic car races. The competitions triggered the creation of several technologies such as LADAR data processing and associated computer vision algorithms.

Figure 7 — Stanley, the winner of 2005 DARPA Grand Challenge

Today, a growing number of companies offer autonomous indoor service robots and outdoor unmanned vehicles (UVs), including commercially successful indoor robotic vacuum cleaners and warehouse robots, robotic lawn mowers, delivery robots, agricultural robotic vehicles, and robotic car strap-on kits.

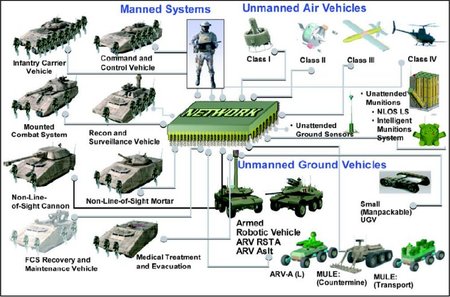

Military unmanned vehicles are rapidly becoming multi-purpose reconfigurable platforms that can be fitted with various mission-specific payload modules. Depending on the mission, unmanned vehicles can carry payloads that detect intrusions, detect enemy fire, detect sniper scopes, attack enemies, or check RFID tags attached to controlled items. Autonomous mobile robots can be programmed to work together with other robots or humans as a team.

Figure 8 — Unmanned Ground Vehicle (General Dynamics Robotic Systems)

The best example of autonomous mobile robots being implemented on a massive scale is Future Combat Systems (FCS), one of the Pentagon's ambitious modernization programs. FCS defines how a number of manned and unmanned platforms carrying various payloads will work together in the future network-centric battle space. Some of the areas being addressed include common architectures (4D/RCS), mission planning, interoperability of the systems (JAUS, STANAG 4586), smart sensors/payloads, and data sharing and exchange (C4I).

Figure 9 — FCS MULE UGV

Figure 10 — Future Combat Systems

Although the Future Combat Systems program is still referred to as a program of the “future,” it turned out that it might be harder to build a robot that can reliably fetch a beer from a refrigerator in a typical home than to build an unmanned combat vehicle that can search for, identify, and blow up a SCUD missile launcher. Service robotics technology had to move beyond autonomous navigation by introducing learning and social service robots, the next iteration of the technology.

Figure 11 — Dexter robot (UMass, Amherst)

Learning technology was the key enabler that allowed robots to intelligently and autonomously utilize robotic arm manipulators in dynamic environments. The latest versions of learning control systems can discover object properties, interact with humans, and even guess human intentions.

Figure 12 — Service robot training (Skilligent LLC)

The software that guides learning and social robots is based on a new principle that departs dramatically from the traditional approaches, as it enables the robots to learn new missions/behaviors directly from humans without reprogramming. Instead of defining “what the robot needs to do,” the software defines “how the robot will learn what it needs to do.”

Figure 13 — A trained service robot doing its job autonomously (Skilligent LLC)

Imagine you are a lucky guy who just got a service robot. You want the robot to make up your room every day, pick oranges in your garden, or palletize boxes in your garage. As the robot has just arrived at your place, you would expect the robot to learn from you all the things you want it to do. The scenario has become a reality with advances in commercial control systems specifically designed for learning service robots and the multitude of research projects in the domain.

Figure 14 — A tele-operated personal robot fetches a beer (Stanford University)

Robot training is somewhat different from programming, as it does not require technical or programming expertise from the trainer. That is why the learning robots need to be social — they have to be able to engage humans directly by initiating hardwired reactions in human brains.

Figure 15 — Social robot Leo (MIT)

At first glance, learning and social robots might not look as cool as the military unmanned vehicles. Nevertheless, the level of autonomy of service robots must be significantly higher; it took a breakthrough in the technology to build a robot that can intelligently utilize its arm or learn what gestures and facial expressions to use in order to communicate with a human.

This brief history of the evolution of service robotics has set the stage for the following overview of state-of-the-art robotic software products. The packages described below can be considered a productized source of robotic intelligence.

Overview of Software Robotic Platforms

Today's service robotics market is often compared to the early PC market. Accordingly, a number of companies are trying to become the “Next Microsoft in Robotics” and build a standard robotic software platform, often referred to as a “robotic operating system.” It is fascinating to see that Microsoft is also in the game with its own robotic software platform, called Microsoft Robotics Developer Studio.

As a result of the gold rush, several robotic software platforms emerged on the market; the products are competing and mostly incompatible (similar to the early PC market). The table below provides a summary of the relevant features of several of the most prominent market players. Remember that there are at least ten other software platforms out there that are not listed in the table.

| Feature | Microsoft Robotics Studio 1.5 | MobileRobots | Skilligent | iRobot AWARE 2.0 | Gostai Urbi | Evolution Robotics ERSP 3.1 | OROCOS | Player, Stage, Gazebo |
|---|---|---|---|---|---|---|---|---|
| Open Source | No | No | No | No | Partial | No | Yes | Yes |
| Free of Charge | Education/hobby | No | No | No | Selected platforms | No | Yes | Yes |
| Windows | Yes | Yes | Yes | Unknown | Yes | Yes | No | Yes (simulation only) |
| Linux | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Distributed Services Architecture | Yes | No | Yes | Yes | Yes | No | No | Yes (limited) |
| Fault-Tolerance | No | No | Yes | Yes | No | No | No | No |
| JAUS Compliant | No | No | Yes | Yes (unconfirmed) | No | No | No | No |
| Graphical OCU | Yes (Web) | Yes | Yes | Yes | Yes | Yes | No | No |
| Graphical Drag-n-Drop IDE | Yes | No | No | No | Yes | Yes | No | No |
| Built-in Robotic Arm Control | No | Yes | Yes | Yes | No | No | Yes | No |
| Built-in Visual Object Recognition | No | No | Yes | No | No | Yes | No | No |
| Built-in Localization System | No | Yes | Yes | No (pieces only) | No | Yes | No | No |
| Robot Learning and Social Interaction | No | No | Yes | No | No | No | No | No |
| Simulation Environment | Yes | Yes | No | Yes | Yes (Webots) | No | No | Yes |
| Reusable Service Building Blocks | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No |
| Real-Time | No | No | No (Planned) | No | No | No | Yes | No |

The featured software products can be logically split into two categories — “pure software frameworks” and “off-the-shelf robotic brains”:

- The products from the “purists” category (Microsoft, Gostai, OROCOS, and Player) provide runtime environments, hardware abstraction layers, development and debugging tools, and simulation environments to engineers/customers who are actually building “robotic brains” using the platforms as programming frameworks.

- The products from the second category (MobileRobots, Skilligent) represent complete robotic intelligence systems (“robotic brains”) that can be installed on a robot to make it autonomous. Those complete control systems are built for the end user of service robots rather than for programmers.

- There are products “in between” (Evolution Robotics, iRobot AWARE) that require application-level/mission-level programming to turn them into complete “robotic brains.”

Some products from different categories can be integrated. For example, the Skilligent software can run as a standalone system, or on top of the Microsoft platform.

Additional observations:

- Two of the systems (OROCOS and Player/Stage) are free and open source.

- Most systems support both Linux and Windows. The major exceptions are Microsoft Robotics Developer Studio, which is a Windows-only platform, and OROCOS, which is a real-time-oriented, Linux-only system.

- Two of the reviewed systems support fault-tolerance — iRobot AWARE 2.0 and Skilligent. Those software packages are suitable for building redundant vehicle control systems that can tolerate major electronics failure, such as a broken computer or a faulty sensor.

- JAUS is supported by Skilligent and (unconfirmed) by iRobot AWARE 2.0.

- Most systems support distributed services architectures that simplify putting together robotic applications from large, loosely coupled software components potentially running on networked computers.

- Skilligent is the only vendor whose system can be trained by a non-professional user through a robot learning and social interaction method.

- MobileRobots offers one of the most mature indoor navigation, obstacle avoidance, and mapping systems. It relies heavily on laser range scanners carried by the robot.

- Built-in indoor navigation/localization capabilities are present in the MobileRobots system, Evolution Robotics system, and in the Skilligent system.

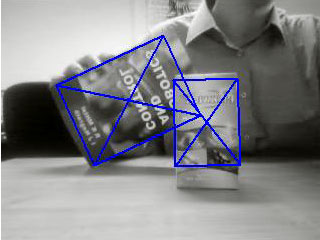

- Built-in visual object recognition capabilities are provided by the Evolution Robotics system (SIFT algorithm) and the Skilligent system (improved Harris Corner Detector algorithm). The rest of the vendors offer third-party software for these purposes.

- Graphical OCUs (Operator Control Units), drag-n-drop graphical development environments, simulation environments, and libraries of reusable service building blocks are present in most systems in one form or another. Those features are becoming the “must have” ones for all robotic software platforms.

- Two companies on the list (MobileRobots and iRobot) created their software products primarily for use with their own hardware robotic platforms. Although the software is specifically built for those particular hardware platforms, it is designed in a hardware-agnostic fashion to simplify integration with new robots.

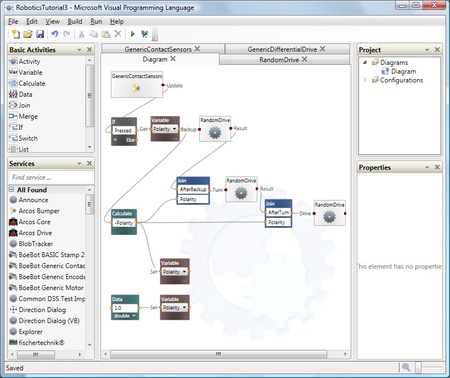

Microsoft Robotics Developer Studio

Microsoft launched its own robotic software platform, called Microsoft Robotics Developer Studio (MSRS).

Microsoft Robotics Developer Studio is an example of the “purists” approach. It provides a runtime environment, a graphical drag-n-drop service creation environment and a simulation environment, but does not have its own built-in “artificial intelligence” components, such as a computer vision system, a navigation system, or a robot learning system. Instead, it relies on a growing number of partners that supply the “intelligence content” for the framework.

A runtime environment called DSS (Decentralized Software Services) underpins MSRS. It connects software modules called services, which run locally or on the network and follow a REST-style model. For example, a navigation system could be treated as a service, and a computer vision system would be a different service. The services are loosely coupled and typically do not know about each other's existence.

Application-specific code orchestrates those services, sends queries, and receives responses back — and thus controls the robot. If a service is located on a remote server, a patented network protocol called DSSP is used to communicate to the server.

As all the services are running in parallel, there is a chance that the application-level orchestration code may get confused by multiple responses simultaneously coming from various services. The answer to the problem is a special library called CCR (Concurrency and Coordination Runtime) that provides a consistent and scalable way to program asynchronous operations and coordinate among multiple responses. DSS makes use of CCR.
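
The coordination problem described above is not specific to MSRS. As a rough analogy only (this is plain Python `asyncio`, not the DSS or CCR API, and the service names `navigation_service` and `vision_service` are hypothetical), the sketch below shows orchestration code that queries two independent services in parallel and proceeds only once both responses have arrived:

```python
import asyncio


# Hypothetical stand-ins for two loosely coupled services.
async def navigation_service(goal):
    await asyncio.sleep(0.02)  # simulated processing latency
    return {"service": "navigation", "path": ["start", goal]}


async def vision_service(target):
    await asyncio.sleep(0.01)
    return {"service": "vision", "found": target == "door"}


async def orchestrate(goal, target):
    """Query both services concurrently and join their responses."""
    nav, vis = await asyncio.gather(
        navigation_service(goal),
        vision_service(target),
    )
    # Both replies are available here, in a fixed order, regardless of
    # which service answered first.
    return "drive" if vis["found"] and nav["path"] else "wait"


def run_mission(goal, target):
    """Synchronous entry point for the application-level code."""
    return asyncio.run(orchestrate(goal, target))
```

Joining the replies in one place keeps the application logic free of callbacks that fire in an unpredictable order, which is the kind of confusion the text attributes to parallel services.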

Figure 16 — Microsoft Visual Programming Language (VPL)

The runtime environment comes with a graphical programming language called VPL that is used to orchestrate multiple asynchronous invocations of decentralized services managed by the DSS/CCR framework. VPL is domain independent and can be used outside of the robotics domain.

The robotics-specific portion of the Microsoft robotic platform consists of a robotic simulation environment, a set of robotics tutorials with sample code, and a nice online courseware package. The platform also defines a set of APIs for standard robotic services, such as a web camera service. The API is a form of hardware abstraction layer (HAL).
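
To illustrate what a hardware abstraction layer buys the application programmer, here is a minimal sketch in Python. It does not reproduce the MSRS service contracts; the `Camera` interface and both driver classes are hypothetical names invented for this example.

```python
from abc import ABC, abstractmethod


class Camera(ABC):
    """Hardware-independent camera interface (hypothetical)."""

    @abstractmethod
    def grab_frame(self):
        """Return one frame as a list of rows of grayscale pixel values."""


class SimulatedCamera(Camera):
    """Driver for a simulator: always returns a black frame."""

    def __init__(self, width, height):
        self.width, self.height = width, height

    def grab_frame(self):
        return [[0] * self.width for _ in range(self.height)]


class ReplayCamera(Camera):
    """Driver that plays back pre-recorded frames in a loop."""

    def __init__(self, frames):
        self._frames = list(frames)
        self._index = 0

    def grab_frame(self):
        frame = self._frames[self._index % len(self._frames)]
        self._index += 1
        return frame


def mean_brightness(camera):
    """Application code: works with any Camera implementation."""
    frame = camera.grab_frame()
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)
```

The function `mean_brightness` never learns which driver it is talking to, so the same code can run against a simulator or a real device once a matching driver exists.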

Figure 17 — Microsoft Simulation environment

Originally greeted with a mixture of enthusiasm, caution, and skepticism by the world of robotics researchers and hobbyists, the MSRS platform turned out to be overkill for small hobby/research projects. Writing DSS services and connecting them to the platform is a major exercise in service-oriented programming, and one that is often an unnecessary distraction from the final goal of a robotics project. And, in the end, one still has to look for a third-party library to handle, for example, object recognition, as the platform does not have any built-in “artificial intelligence.”

On the other hand, if a robot (simulated or real) comes with a package of DSS services already built for it, the robot can be easily programmed using the VPL graphical language (mission-level programming). This helps, especially if the platform is used for education.

The platform's design is heavily influenced by Web services architectures (SOAP/REST), which were not originally meant for low-latency applications such as real-time vehicle control. In general, traditional Web technologies are optimized for traffic volume rather than for achieving the lowest latency. The problem is somewhat worsened by the .NET Framework's memory garbage collector, a source of random delays. How well the IT-style architecture of the Microsoft platform fits the real-time (or even “soft” real-time) domain remains to be seen.

Several companies and teams use the Microsoft platform with success. Robosoft, a company building autonomous robots, uses it as a software framework for all of their robots. Coroware uses MSRS as a programming and simulation environment for their CoroBot robots, built for the research and education market. The Princeton Urban Grand Challenge team used MSRS as a software framework for a control system built for their unmanned ground vehicle (pictured below).

Figure 18 — Princeton Urban Grand Challenge unmanned vehicle

MobileRobots

MobileRobots produces autonomous robots that are the de facto standard robotic platforms for researchers. The company has also diversified into service robotics by introducing delivery robots, security patrol robots, and tele-presence robots.

Figure 19 — Robots manufactured by MobileRobots

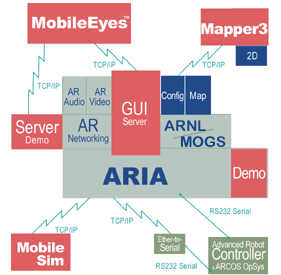

Besides designing nice hardware, MobileRobots has made autonomous indoor navigation technology its core competency. The company has developed a suite of technologies, including both software and sensors, that enables its mobile robots to reliably navigate and explore various indoor (or indoor-like) environments. As a result, the software platform performs very well at autonomous indoor navigation.

Figure 20 — Architecture of MobileRobots' software

The API of the platform is called ARIA (Advanced Robotics Interface Application). The core software package is called Autonomous Robotic Navigation & Localization (ARNL). ARNL enables the robots to navigate both indoor and outdoor environments with high precision. The software package makes heavy use of laser range scanners, encoders on wheels, and, optionally, inertial measurement units (IMU), video cameras, and GPS sensors (outdoor).

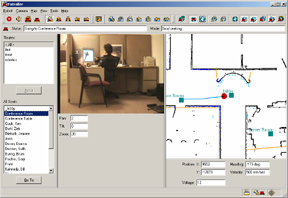

Figure 21 — MobileEyes, an operator control unit (OCU) program

An OCU software package called MobileEyes comes with the platform. It allows controlling a robot wirelessly; it is enough to click on a map to send a robot to a particular destination on the map. A simulation environment, MobileSim, is based on the open-source Stage simulator (discussed below).

The platform comes with three computer vision software packages. A package called Advanced Color Tracking Software (ACTS) allows color tracking over a wide variety of lighting conditions. The other packages include omnivision dewarping and stereovision range finding software.

The platform does not have its own graphical drag-n-drop service creation environment like other products, but a software program called TrainingFactory allows setting up typical task/mission plans (e.g. patrolling or delivery) in an intuitive way, without programming.

Figure 22 — Defining a new task for a robot in MobileRobots' TrainingFactory

Skilligent

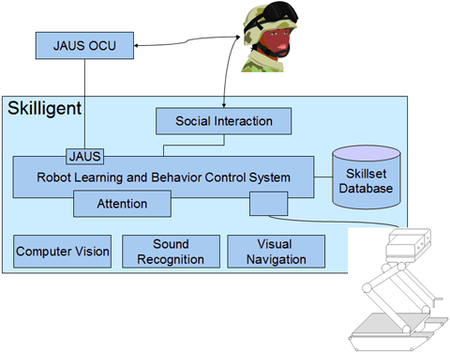

Skilligent is a leader in commercial robot learning and social interaction technology. Their product, called Robot Learning and Behavior Control System, is a complete robotic intelligence system for learning multi-task service robots with the capability of utilizing robotic arm manipulators.

Figure 23 — A robot controlled by Skilligent software uses its arm to grab an object from a trainer's hand

The philosophy of the product is to eliminate programming by introducing a fully trainable control system. After integrating the software into a robot (this still might require programming), a user starts communicating with the robot via gestures, attracting its attention to objects or places, and invoking “reflexive behaviors” through known stimuli. By interacting with the robot and making demonstrations, the user can develop new behaviors and new stimuli in the robot. The iterative training process keeps going until the robot is trained enough to do its job autonomously.

Figure 24 — Architecture of the Skilligent software

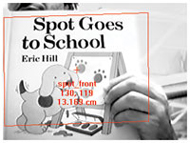

Skilligent developed a robot vision system capable of recognizing objects under real life conditions from a large database of known objects. The same vision system recognizes gestures used for robot training, and provides multiple bottom-up visual attention signals. A bottom-up visual attention system is capable of selecting objects that might be relevant to the current task being learned. The object representations are then stored in an image database integrated with a database of known skills and behaviors.

Figure 25 — Skilligent software is tracking two objects

A visual robot navigation system that comes with the product allows a robot or a UAV to navigate in known environments. The environments are explored and mapped out during a training session. Also, there is a way to create digital maps from existing images of a territory (e.g. for aerial image-based navigation). The navigation system uses a sequence of observed visual landmarks for position triangulation. Between the observations, the system uses a dead-reckoning mechanism to estimate the robot's position.
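
The source does not describe Skilligent's actual algorithms, so the following is only a textbook-style sketch of the two ingredients named above: dead reckoning between observations and a position fix from bearings to known landmarks. The function names and the two-landmark simplification are assumptions made for illustration.

```python
import math


def dead_reckon(x, y, heading, distance, turn):
    """Advance `distance` along the current heading, then rotate by `turn` (radians)."""
    return (x + distance * math.cos(heading),
            y + distance * math.sin(heading),
            heading + turn)


def triangulate(landmark_a, bearing_a, landmark_b, bearing_b):
    """Locate the robot from world-frame bearings to two known landmarks.

    Each bearing is the direction from the robot to the landmark, measured
    counter-clockwise from the world x axis.
    """
    ax, ay = landmark_a
    bx, by = landmark_b
    uax, uay = math.cos(bearing_a), math.sin(bearing_a)
    ubx, uby = math.cos(bearing_b), math.sin(bearing_b)
    # Solve landmark_a - landmark_b = range_a * u_a - range_b * u_b for range_a.
    det = ubx * uay - uax * uby
    if abs(det) < 1e-9:
        raise ValueError("bearings are parallel; position is not observable")
    dx, dy = ax - bx, ay - by
    range_a = (ubx * dy - dx * uby) / det
    # Step back from landmark A along its bearing to reach the robot.
    return ax - range_a * uax, ay - range_a * uay
```

In practice the dead-reckoned estimate drifts as odometry errors accumulate, and each landmark fix resets that drift; a real system would typically blend the two with a filter rather than overwrite one with the other.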

A combination of the generalization algorithms is the key to the robot learning technology. For example, during a robot training session, a robot learns from a human how to perform a task. The task learning algorithms build a generalized task representation as a sequence of steps, decision points, conditions, and hierarchical skills. The generalized representation is totally hidden from the end user. The only way for a user to check that the robot's internal task representation is correct is to actually instruct the robot to perform the task autonomously. While observing how the robot acts autonomously, the trainer checks if the generalized task representation is correct (“the robot has learned what it needs to do”). If not, the trainer can interrupt the robot at any time and make additional demonstrations. The additional input causes the task learning algorithms to update the internal generalized task representation. The iterative process goes on until the robot “learns what it needs to do;” in technical terms, this means that the generalized task representation causes the robot to behave in a way that matches the expectations of the trainer.

This iterative process could be called robot programming by demonstration, but as the user does not have to know what is really going on in the robot's software, the term “programming” might not be fully appropriate. Instead, Skilligent uses the term “robot training,” which underlines the fact that the user does not really have to know how the software works in order to successfully train the robot. This quality ensures that a non-professional user (such as a warehouse worker) can successfully train the robot.

The Skilligent software product is designed for multi-task service robots. This means that the robots can be adapted to new tasks without reprogramming. The robot learning technology is the key factor that makes it possible. The software relies on multiple artificial intelligence algorithms working in parallel. It requires a fairly powerful CPU, such as an Intel Core Duo, to allow the robot to work on the same timescale as a human trainer. This implies that a robot that uses the software must be controlled by a laptop-class motherboard or a wirelessly connected desktop computer.

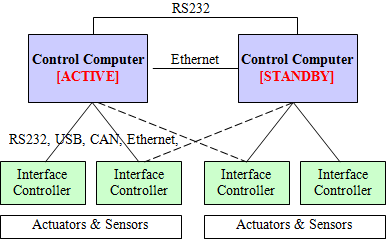

The second major product of Skilligent is called Fault-Tolerant Control Framework, a JAUS-compliant “pure” robotic software platform targeted at fault-tolerant vehicle control systems. The platform is said to have been originally designed as a software framework for highly redundant avionics and communications systems.

Figure 26 — Redundant electronics configuration running under control of the Skilligent software

The platform has a built-in mechanism for handling hardware and software faults, including failures of control computers and sensors. The system has a built-in distributed shared memory facility that automatically replicates critical mission state information from an active computer to one or more standby computers. In addition, the distributed shared memory synchronizes memory caches across a cluster of control computers. A low-latency UDP protocol is used for data synchronization. The software platform is optimized for building reliable distributed control systems.
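
The wire format of the Skilligent framework is not described in the source. As a hedged illustration of the general idea, the sketch below replicates state snapshots from an active controller to a standby. Because UDP may drop or reorder datagrams, each snapshot carries a sequence number and the standby ignores anything older than what it already holds. The class names, the JSON encoding, and the sequence-number scheme are all assumptions; the transport is abstracted as a `send` callable so the logic can be read without a network.

```python
import json


def encode_snapshot(seq, state):
    """Serialize a sequence-numbered state snapshot into one datagram payload."""
    return json.dumps({"seq": seq, "state": state}, sort_keys=True).encode("utf-8")


class StandbyReplica:
    """Keeps the newest mission state received from the active computer."""

    def __init__(self):
        self.seq = -1
        self.state = {}

    def apply(self, datagram):
        message = json.loads(datagram.decode("utf-8"))
        if message["seq"] <= self.seq:
            return False  # stale or duplicate datagram: ignore it
        self.seq = message["seq"]
        self.state = message["state"]
        return True


class ActiveController:
    """Publishes a full snapshot after every change to the mission state."""

    def __init__(self, send):
        self._send = send  # callable taking bytes, e.g. a wrapper around a UDP socket
        self._seq = 0
        self.state = {}

    def update(self, key, value):
        self.state[key] = value
        self._send(encode_snapshot(self._seq, self.state))
        self._seq += 1
```

Sending full snapshots rather than deltas means a lost datagram costs nothing once the next one arrives, at the price of larger messages.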

Realizing that a fault-tolerant platform might be overkill for many service robotics projects that do not require redundancy and high reliability due to cost limitations, Skilligent created a lightweight version of the framework with most fault-tolerance features disabled. The lightweight platform is included in the Robot Learning and Behavior Control System, the “artificial intelligence package.”

In addition, Skilligent integrated its Robot Learning and Behavior Control System with Microsoft Robotics Developer Studio. Integration with Gostai Urbi, another “pure” platform, is on the roadmap.

The Skilligent product comes closest to the goal of building a complete software robotic brain that comes with everything needed to add off-the-shelf intelligence to a service robot.

iRobot AWARE 2.0

iRobot's AWARE 2.0 is a software robotic platform developed by iRobot for a range of military robots produced by the company.

iRobot has a large base of robots already deployed with the US Army, law enforcement agencies and foreign militaries. iRobot encourages third party developers to build add-ons to the robots. If you want to sell your payloads or technology through iRobot to their customers, you have to integrate your product with AWARE — and pay the related license fees.

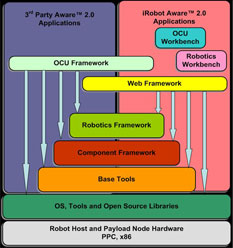

Figure 27 — Architecture of iRobot AWARE 2.0 software

There are two versions of the platform:

- An “Integrator version” supports Python scripting as the only means of creating robotic applications, primarily as glue code to test a new payload. The version costs less and is targeted at users willing to build quick proof-of-concept applications using simple Python scripts.

- The full version, called Enterprise edition, provides a full-blown C++ development environment aimed at building high-performance, fully-integrated native applications. The development environment is claimed to be the same as that used internally by the iRobot engineering team.

The platform has a built-in data messaging system designed around a publisher-subscriber model. Interested software modules running somewhere in the onboard network can subscribe to updates coming from a particular publisher, for example, a sensor. The multicasting nature of the messaging system allows building process-level fault-tolerant architectures. The software platform comes with many built-in functions for controlling robotic manipulators, including handling of self-collision avoidance, inverse kinematics, and remote control from an OCU. All other advanced artificial intelligence functions, such as computer vision or autonomous navigation, are outside the scope of AWARE and are provided by external payload modules.
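
The publisher-subscriber model can be shown in a few lines. This is a generic in-process sketch, not the AWARE API: the `MessageBus` class and the topic names are hypothetical, and a real system would deliver messages across the onboard network rather than through direct function calls.

```python
from collections import defaultdict


class MessageBus:
    """Minimal in-process publish/subscribe dispatcher."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register `callback` to receive every message published on `topic`."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver `message` to all current subscribers; return how many got it."""
        callbacks = list(self._subscribers.get(topic, []))
        for callback in callbacks:
            callback(message)
        return len(callbacks)
```

The publisher does not know who is listening, so a second, redundant consumer of a sensor stream can be added without touching the sensor code. That property is what makes the process-level redundancy mentioned above straightforward to arrange.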

A growing number of companies offer payload modules compatible with AWARE. iRobot runs a third-party developers program aimed at helping interested parties integrate new technologies into the family of military robots (Warrior, PackBot, and SUGV). The AWARE platform acts as software glue and a common denominator for multiple hardware and software components built by third parties.

Unlike other software platform vendors, iRobot offers a clear path to revenues for third-party developers.

Gostai Urbi

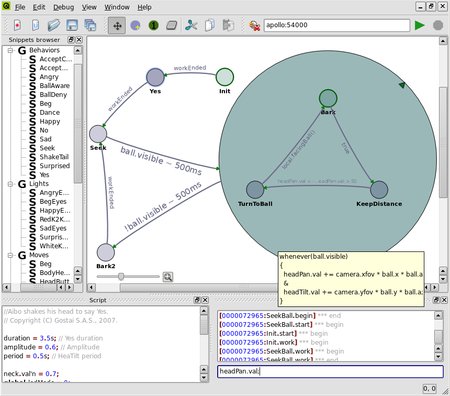

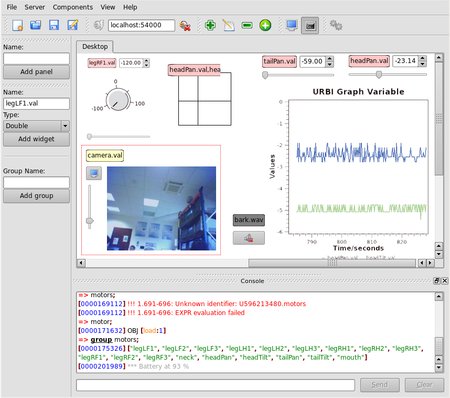

Urbi is a robotic software platform built by Gostai. It includes a runtime environment, a nice set of development tools, an OCU program, and a hardware abstraction layer for several robots. One of the great features of the platform is its accompanying set of graphical development tools called Urbi Studio, a toolsuite aimed at simplifying the creation of robotics applications. The tools are called urbiLive, urbiMove, and urbiLab.

Figure 28 — Screenshot of urbiLive, a graphical programming environment

The centerpiece of Urbi Studio is urbiLive, a graphical drag-n-drop environment that helps design behaviors for robots. The tool converts graphical behavior diagrams into urbiScript, a scripting language interpreted by Urbi's runtime engine.

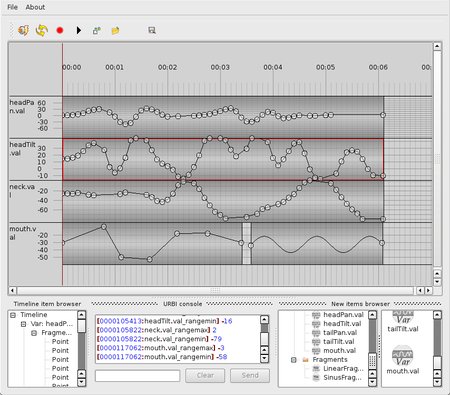

Figure 29 — Screenshot of urbiMove, an animations editor

A program called urbiMove is used to graphically design animations, dances, and postures for various robots, or to record movements and replay them later.

Figure 30 — Screenshot of urbiLab, an OCU program

urbiLab is a customizable graphical OCU (operator control unit) for robots compatible with Urbi.

Figure 31 — Webots, a simulation environment (Cyberbotics)

Urbi does not have its own simulation environment. Instead, it relies on a popular commercial simulation environment called Webots, sold by Cyberbotics.

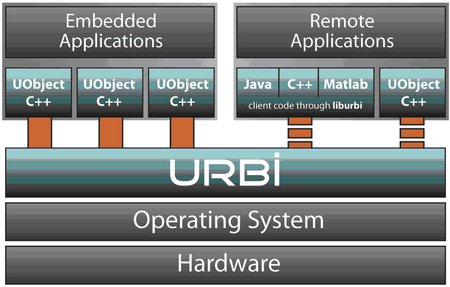

Figure 32 — Architecture of URBI software

Gostai's novel scripting language, urbiScript, features dedicated abstractions to handle parallelism and event-based programming from within C++, Java, or Matlab. A distributed component architecture called UObject allows creating robotic applications from high-level service building blocks potentially running on networked computers. The runtime engine orchestrates those loosely coupled services.
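
To give a feel for what "parallelism and event-based programming" means in a robot script, here is an analogy written in Python `asyncio`. It is not urbiScript and does not use any Urbi API; the coroutine names and the `ball_seen` event are hypothetical. A motion runs in parallel with a handler that stays suspended until a sensor event fires.

```python
import asyncio


async def wave_arm(log):
    """A motion that runs step by step, yielding between steps."""
    for step in range(3):
        log.append(f"wave {step}")
        await asyncio.sleep(0.01)


async def on_ball_seen(ball_seen, log):
    """Event handler: suspended until the event fires."""
    await ball_seen.wait()
    log.append("track ball")


async def sensor(ball_seen):
    """Simulated perception that raises the event after a short delay."""
    await asyncio.sleep(0.015)
    ball_seen.set()


async def main():
    log = []
    ball_seen = asyncio.Event()
    # Run the motion, the handler, and the sensor concurrently.
    await asyncio.gather(wave_arm(log), on_ball_seen(ball_seen, log), sensor(ball_seen))
    return log


def run_demo():
    return asyncio.run(main())
```

The handler reacts while the arm motion is still in progress, without either piece of code polling the other.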

Urbi is a pure software platform that provides a clean programming framework for engineers and researchers. The “intelligence components” such as computer vision, robot learning, navigation, and object recognition are provided by Gostai's partners.

Evolution Robotics ERSP 3.1

Evolution Robotics was one of the first companies to introduce a commercial implementation of a generic software robotic platform.

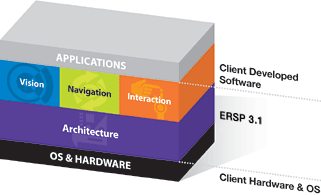

Figure 33 — Architecture of ERSP 3.1 software

Their product, called ERSP, combines a cross-platform runtime environment, a graphical drag-n-drop development environment, a visual object recognition system (ViRP), and a vision-based localization and mapping (vSLAM) system. The computer vision system and the SLAM system are based on the SIFT algorithm invented by David Lowe.

Figure 34 — ViRP software

A recent innovation from Evolution Robotics is NorthStar, a robot localization system based on infrared beacon technology.

The platform comes with a graphical toolkit that can be used for building programs from a number of reusable software building blocks. The building blocks, called behaviors, are activated and deactivated at run time by a higher-level program called a task. ERSP runs on both Windows and Linux.
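The relationship between behaviors and a task can be sketched in a few lines of Python. This is only an illustration of the pattern, not the ERSP API: the class names (Behavior, AvoidObstacle, Wander, PatrolTask), the range_m sensor key, and the simple priority rule are all assumptions made for the example.

```python
class Behavior:
    """A reusable building block that a task can switch on and off."""

    def __init__(self, name):
        self.name = name
        self.active = False

    def step(self, sensors):
        raise NotImplementedError


class AvoidObstacle(Behavior):
    def step(self, sensors):
        # Propose a turn only when something is closer than half a meter.
        return "turn" if sensors["range_m"] < 0.5 else None


class Wander(Behavior):
    def step(self, sensors):
        return "forward"


class PatrolTask:
    """Higher-level program that activates and deactivates behaviors."""

    def __init__(self):
        # List order doubles as priority (an assumption of this sketch).
        self.behaviors = [AvoidObstacle("avoid"), Wander("wander")]

    def set_active(self, name, active):
        for behavior in self.behaviors:
            if behavior.name == name:
                behavior.active = active

    def step(self, sensors):
        # The first active behavior that proposes a command wins.
        for behavior in self.behaviors:
            if behavior.active:
                command = behavior.step(sensors)
                if command is not None:
                    return command
        return "stop"
```

For example, after `task = PatrolTask()` and `task.set_active("wander", True)`, calling `task.step({"range_m": 2.0})` returns `"forward"`. Activating `"avoid"` as well makes the same call return `"turn"` whenever the reported range drops below 0.5.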

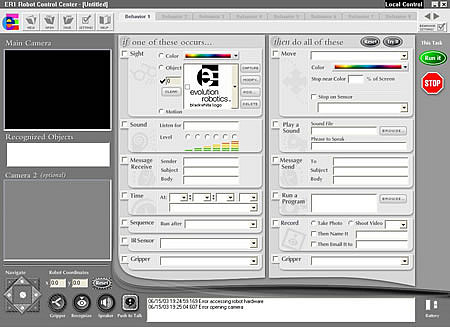

Figure 35 — A screenshot of Evolution Robotics's graphical programming environment

Besides the graphical toolkit, ERSP supports development of programs in the Python scripting language.

Figure 36 — A screenshot of Evolution Robotics's Robot Control Center, an OCU program

A program called Robot Control Center allows defining missions/tasks for the ER1 personal robot as sequences of IF-THEN statements. Besides that, the application works as an operator control unit (OCU) that allows controlling an ER1 robot remotely.

ERSP does not come with a simulation environment and does not support a distributed services architecture. Although ERSP is a mature system, it does not seem to be actively developed, as the company has not made a new release in a while.

Evolution Robotics owns a patent on an invention called a hardware abstraction layer (HAL) for a robot (US Patent 7302312), which “permits robot control software to be written in a robot-independent manner.” As most software products described in this article have some sort of HAL, their vendors should be looking for appropriate prior art.

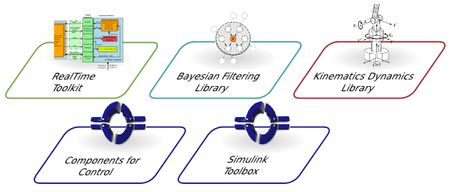

OROCOS

OROCOS is an open source set of libraries for advanced motion and robot control. OROCOS comes with its own runtime environment optimized for real-time applications. The environment makes use of lock-free buffers, making it suitable for deterministic real-time applications.

Figure 37 — Architecture of OROCOS software

OROCOS comes with a set of reusable components and a set of drivers for selected robotic hardware. Its kinematics and dynamics library is an application-independent framework for modeling and computing kinematic chains, such as robots, biomechanical human models, computer-animated figures, and machine tools.
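As a rough illustration of what computing a kinematic chain means, the following Python sketch evaluates the forward kinematics of a planar serial chain of revolute joints. It does not use the OROCOS library or its API; the function name and the restriction to two dimensions are simplifications assumed for this example.

```python
import math

def forward_kinematics(link_lengths, joint_angles):
    """Return (x, y, heading) of the tip of a planar serial chain.

    link_lengths are in meters and joint_angles in radians; each
    angle is measured relative to the previous link.
    """
    x = y = heading = 0.0
    for length, angle in zip(link_lengths, joint_angles):
        heading += angle                  # accumulate the joint rotation
        x += length * math.cos(heading)   # advance along the rotated link
        y += length * math.sin(heading)
    return x, y, heading
```

With two links of one meter each, joint angles of (0, 0) stretch the arm straight along the x axis, while angles of (pi/2, -pi/2) place the tip at roughly (1, 1). A full library generalizes the same idea to three dimensions, other joint types, and dynamics.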

OROCOS does not come with a graphical drag-n-drop development environment or a simulation environment.

The Berlin Racing Team used the OROCOS Real-Time Toolkit as the framework for its vehicle control system in the 2007 DARPA Urban Challenge. The team was one of the semifinalists selected by DARPA, and its web site discusses the use of OROCOS.

Figure 38 — An unmanned ground vehicle of Berlin Racing Team, a participant in the DARPA Urban Challenge

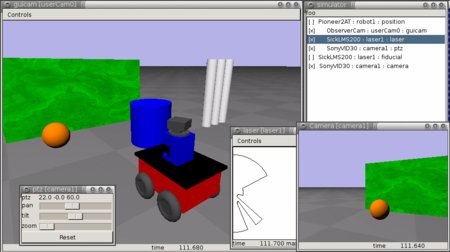

Player, Stage, Gazebo

Player is a popular open source TCP/IP-based hardware abstraction layer for a growing number of robotic hardware modules. Stage and Gazebo provide accompanying simulation environments. Both support multi-robot simulations suitable for research on robot swarms and teams.

Figure 39 — Player, Stage and Gazebo

Running on a robot, Player provides a simple interface to the robot's sensors and actuators over the IP network. Client programs can read data from the sensors, write commands to the actuators, and configure devices on the fly. A number of open source software modules have been developed for Player, including computer vision and navigation/mapping modules.

Other platforms

There are several other robotic software platforms available on the market, such as CLARAty, YARP, Pyro, and Edinburgh Robotics' DevBot. The author has not had a chance to study those platforms more closely. Here is some information:

- CLARAty is a software platform built by NASA and then released as an open source project. The software seems to provide a set of interesting algorithms, such as visual heading and pose estimation and visual wheel-sinkage estimation.

- Edinburgh Robotics DevBot is a robotic software platform that simplifies development of distributed robotic control systems by providing a unified distributed hardware abstraction layer.

- Pyro (Python Robotics) is an open source Python-based robot programming environment. It comes with a nice curriculum, so it can be used for classroom robotics.

- YARP (“Yet Another Robot Platform”) is an open source set of libraries, protocols, and tools to keep modules and devices cleanly decoupled.

Importance of JAUS standard

The U.S. Department of Defense is the world's largest single buyer of service robots. JAUS is mandated for use by all of the programs in the DoD Joint Ground Robotics Enterprise (JGRE). This basically means that if a company wants to sell robots to the DoD, the robots must be JAUS-compatible. Even if you are not planning to sell your robots to the DoD, it probably still makes sense to support JAUS, just in case.

JAUS stands for Joint Architecture for Unmanned Systems. Although it is called an “architecture,” it can be treated as a UDP/RS232 binary protocol primarily used today for communication between mobile ground robots and their OCUs (operator control units). JAUS can be used as a standard interface for connecting new payloads to reconfigurable robotic platforms. It can also be used as a protocol for internal communication between various modules of a robotic control system — that is when JAUS can be thought of as an “architecture.”
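To show what treating a standard as a binary protocol involves in practice, here is a small Python sketch that packs and unpacks a fixed-size message header of the kind that could be sent in a UDP datagram or over a serial link. The field layout below is hypothetical and is not the actual JAUS header; the field names, sizes, and byte order are assumptions chosen only to illustrate the technique.

```python
import struct

# Hypothetical layout, NOT the real JAUS header: little-endian
# command code (uint16), source id (uint32), destination id (uint32),
# payload length (uint16), followed by the payload bytes.
HEADER = struct.Struct("<HIIH")

def pack_message(command, source, destination, payload=b""):
    """Serialize one message into bytes ready to send as a datagram."""
    header = HEADER.pack(command, source, destination, len(payload))
    return header + payload

def unpack_message(datagram):
    """Parse a datagram back into (command, source, destination, payload)."""
    command, source, destination, length = HEADER.unpack_from(datagram)
    payload = datagram[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    return command, source, destination, payload
```

A robot and its OCU that agree on such a layout can exchange messages regardless of the language or operating system on either side, which is the practical benefit of a common wire format.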

There is controversy surrounding JAUS, caused by the (unconfirmed) possibility that all JAUS implementations might fall under ITAR (International Traffic in Arms Regulations), which restrict the export of defense articles and services. Once the controversy is resolved, a greater number of vendors of robotic software platforms are expected to declare their support for JAUS.

There are open source and commercial implementations of JAUS.

Conclusion

So, what is the best software package? The jury is still out.

If you are using robotics for educational purposes and Lego Mindstorms does not fit your curriculum, I would recommend Microsoft Robotics Studio. Its Visual Programming Language and Simulation Environment offer an easy way to script your robots.

If you are a robot designer looking for a complete robotic intelligence package for your service robot, my vote definitely goes to Skilligent. Their software product has everything needed to turn a bunch of motors, actuators, cameras, and sensors into a learning and social creature. And you do not have to be a software guru to make it work.

If you are a researcher looking for a robotic platform, check if there is a MobileRobots platform that fits your budget. These robots will not break in two weeks, and they come with full indoor/outdoor navigation capabilities.

If buying the hardware is not in your plans, take a look at Gostai's Urbi software, a clean and easy software framework for robot programming. ERSP would be a good choice if your research requires vision/navigation capabilities. If you are a researcher working in the area of robot programming by demonstration, social robot-to-human interaction, or behavior coordination and control, the Skilligent software package will give you a good start. Finally, if your research budget approaches zero, take a look at Player/Stage, OROCOS, or Microsoft Robotics Studio.

If you are thinking about actually using a robot to automate delivery processes or security, MobileRobots would be a place to go.

If you are a creator of the latest sensor/actuator technology, make sure your product is compatible with iRobot AWARE 2.0. If they like your technology, they might want to resell it to their customers in the defense industry, and you would get a chance to make money that way. Be ready to pay some license fees up front in order to get into the game.

If you are building a real-time vehicle control system, check out OROCOS, which was designed for real-time operation from the very beginning. Skilligent would be your option if you also want to achieve fault tolerance via redundancy.

Obviously, the market for service robot platforms is still fairly young. Yet, as this article shows, there are already quite a few options for developers interested in helping to advance the field. With luck, the coming year will bring even more variety, and further advances in this fascinating field.

About the Author — Michael Somby is a professional control systems engineer. In his leisure time, he is a long-time enthusiast of service robotics and artificial intelligence. His email address is [email protected].

This article was originally published on LinuxDevices.com and has been donated to the open source community by QuinStreet Inc. Please visit LinuxToday.com for up-to-date news and articles about Linux and open source.